Abstract

Digital reconstruction of neuronal cell morphology is an important step toward understanding the functionality of neuronal networks. Neurons are tree-like structures whose description depends critically on the junctions and terminations, collectively called critical points, making the correct localization and identification of these points a crucial task in the reconstruction process. Here we present a fully automatic method for the integrated detection and characterization of both types of critical points in fluorescence microscopy images of neurons. In view of the majority of our current studies, which are based on cultured neurons, we describe and evaluate the method for application to two-dimensional (2D) images. The method relies on directional filtering and angular profile analysis to extract essential features about the main streamlines at any location in an image, and employs fuzzy logic with carefully designed rules to reason about the feature values in order to make well-informed decisions about the presence of a critical point and its type. Experiments on simulated as well as real images of neurons demonstrate the detection performance of our method. A comparison with the output of two existing neuron reconstruction methods reveals that our method achieves substantially higher detection rates and could provide beneficial information to the reconstruction process.

Keywords: Neuron reconstruction, Junction detection, Bifurcation detection, Termination detection, Fuzzy logic, Fluorescence microscopy, Image analysis

Introduction

The complexity and functionality of the brain depend critically on the morphology and related interconnectivity of its neuronal cells (Kandel et al. 2000; Ascoli 2002; Donohue and Ascoli 2008). To understand how a healthy brain processes information and how this capacity is negatively affected by psychiatric and neurodegenerative diseases (Anderton et al. 1998; Lin and Koleske 2010; Šišková et al. 2014), it is therefore very important to study neuronal cell morphology. Advanced microscopy imaging techniques allow high-sensitivity visualization of individual neurons and produce vast amounts of image data, which are shifting the bottleneck in neuroscience from the imaging to the data processing (Svoboda 2011; Peng et al. 2011; Senft 2011; Halavi et al. 2012) and call for a high level of automation. The first processing step toward high-throughput quantitative morphological analysis of neurons is their digital reconstruction from the image data. Many methods have been developed for this in the past decades (Meijering 2010; Donohue and Ascoli 2011), but the consensus of recent studies is that there is still much room for improvement in both accuracy and computational efficiency (Liu 2011; Svoboda 2011).

A key aspect of any neuron reconstruction method is the detection and localization of terminations and junctions of the dendritic (and axonal) tree, collectively called “critical points” in this paper (Fig. 1), which ultimately determine the topology and faithfulness of the resulting digital representation. Roughly, there are two ways to extract these critical points in neuron reconstruction (Al-Kofahi et al. 2008; Meijering 2010; Basu et al. 2013). The most often used approach is to start with segmentation or tracing of the elongated image structures and then to infer the critical points, either afterwards or along the way, by searching for attachments and endings in the resulting subsets (Dima et al. 2002; Xiong et al. 2006; Narro et al. 2007; Vasilkoski and Stepanyants 2009; Bas and Erdogmus 2011; Chothani et al. 2011; Dehmelt et al. 2011; Ho et al. 2011; Choromanska et al. 2012; Xiao and Peng 2013). This approach depends critically on the accuracy of the initial segmentation or tracing procedure, which usually is not designed to reliably capture critical points in the first place and thus often produces very fragmented results, requiring manual postprocessing to fix issues (Peng et al. 2011; Luisi et al. 2011; Dercksen et al. 2014). The reverse approach is to first identify critical points in the images and then to use these as seed points for tracing the elongated image structures. Critical points can be obtained either by manual pinpointing, as in semiautomatic tracing methods (Meijering et al. 2004; Schmitt et al. 2004; Narro et al. 2007; Lu et al. 2009; Peng et al. 2010; Longair et al. 2011), or by fully automatic detection using sophisticated image filtering and pattern recognition methods (discussed in the next section). The latter approach has barely been explored for neuron reconstruction, but if reliable detectors can be designed, they can provide highly valuable information to the reconstruction process.

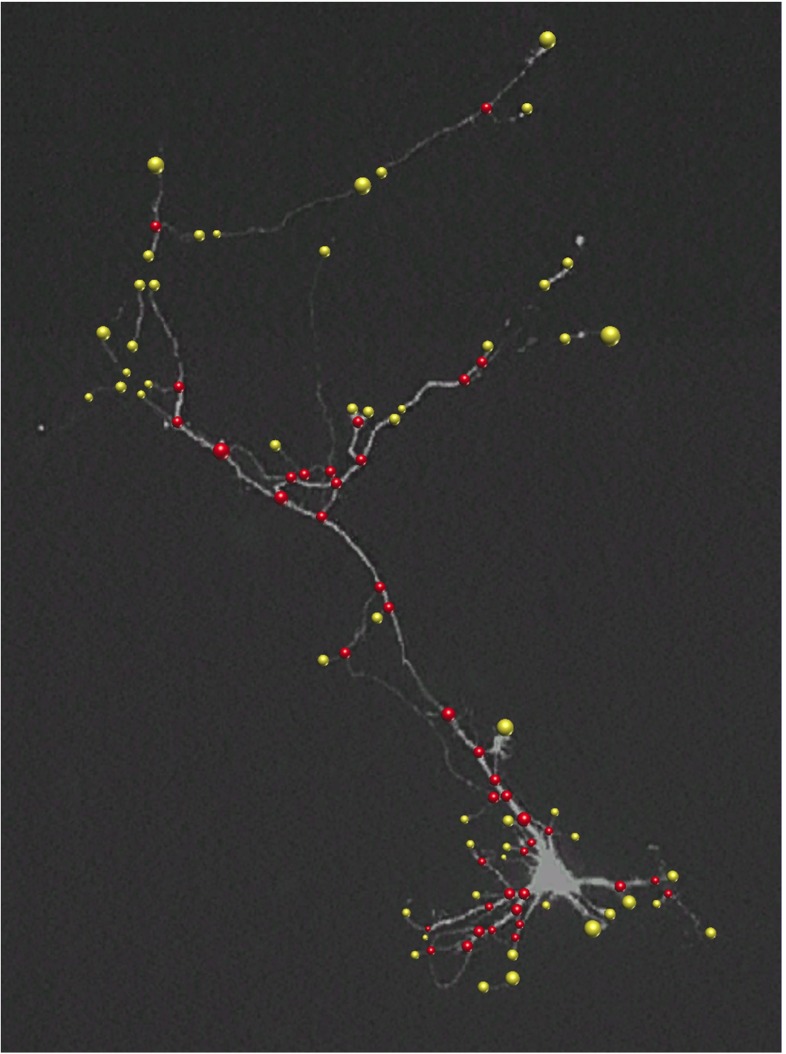

Fig. 1.

Fluorescence microscopy image of a neuron with manually indicated junctions (red circles) and terminations (yellow circles). The radius of each annotated critical-point region reflects the size of the underlying image structure

Here we present a novel method, which we call Neuron Pinpointer (NP), for fully automatic detection and characterization of critical points in fluorescence microscopy images of neurons. We describe and evaluate the method for studies where single (cultured) neurons are imaged in 2D, although all aspects of the method can in principle be extended to 3D. The method may also be useful for reconstruction approaches based on 2D projections (Zhou et al. 2015). For computational efficiency the method starts with an initial data reduction step, based on local variation analysis, by which obvious background image regions are excluded. In the remaining set of foreground regions the method then explores the local neighborhood of each image pixel and calculates the response to a set of directional filters. Next, an iterative optimization scheme is used for robust peak selection in the resulting angular profile, and a set of corresponding features relevant for the detection task is computed. The feature set is then further processed to make a nonlinear decision on the presence of a critical point and its type (termination or junction) at each foreground image pixel. To conveniently deal with ambiguity and uncertainty in the data, the decision-making is carried out by a fuzzy-logic rule-based system using predefined rules specifically designed for this task. The presented work aims to facilitate the task of automatic neuron reconstruction by contributing a general scheme for extracting critical points that can serve as useful input for any tracing algorithm.

This paper is a considerably extended version of our recent conference report (Radojević et al. 2014). We have modified the filtering algorithms and fuzzy-logic rules so as to be able to detect both junction and termination points. In addition we here present the full details of our method and an extensive evaluation based on both manually annotated real neuron images and computer generated neuron images. To obtain the latter we here propose a new computational approach based on publicly available expert manual tracings. We start with a brief overview of related work on critical-point detection (“Related Work”) and then present the underlying concepts (“Proposed Method”), implementational details (“Implementational Details”), and experimental evaluation (“Experimental Results”) of our method, followed by a summary of the conclusions that can be derived from the results (“Conclusions”).

Related Work

Detecting topologically critical points in images has been a long-standing problem in many areas of computer vision. Although an in-depth review of the problem and proposed solutions is outside the scope of this paper, we provide a brief discussion in order to put our work into context.

Examples of previous work include the design of filters to find image locations where either three or more edges join (“junctions of edges”) (Sinzinger 2008; Hansen and Neumann 2004; Laganiere and Elias 2004) or three or more lines join (“junctions of lines”) (Yu et al. 1998; Deschênes and Ziou 2000). In biomedical applications, the predominant type of junction is the bifurcation, with occasional trifurcations, as seen in blood vessel trees, bronchial trees, gland ductal trees, and also in dendritic trees (Koene et al. 2009; Iber and Menshykau 2013). Hence, research in this area has focused on finding image locations where three (or more) elongated structures join (Tsai et al. 2004; Agam et al. 2005; Bevilacqua et al. 2005; Bhuiyan et al. 2007; Zhou et al. 2007; Aibinu et al. 2010; Calvo et al. 2011; Obara et al. 2012b; Su et al. 2012; Azzopardi and Petkov 2013).

A common approach to find bifurcation points is to infer them from an initial processing step that aims to segment the elongated structures. However, the way these structures are segmented may influence the subsequent critical-point inference. Popular image segmentation methods use intensity thresholding and/or morphological processing, in particular skeletonization (Hoover et al. 2000; Dima et al. 2002; He et al. 2003; Weaver et al. 2004; Pool et al. 2008; Bevilacqua et al. 2009; Leandro et al. 2009; Aibinu et al. 2010), but these typically produce very fragmented results. Popular methods to enhance elongated image structures prior to segmentation include Hessian based analysis (Frangi et al. 1998; Xiong et al. 2006; Zhang et al. 2007; Al-Kofahi et al. 2008; Yuan et al. 2009; Türetken et al. 2011; Myatt et al. 2012; Basu et al. 2013; Santamaría-Pang et al. 2015), Laplacean-of-Gaussian filters (Chothani et al. 2011), Gabor filters (Bhuiyan et al. 2007; Azzopardi and Petkov 2013), phase congruency analysis (Obara et al. 2012a), and curvelet based image filtering approaches (Narayanaswamy et al. 2011). However, being tailored to elongated structures, such filters often yield a less optimal response precisely at the bifurcation points, where the local image structure is more complex than a single ridge.

Several concepts have been proposed to explicitly detect bifurcation points in the images without relying on an initial segmentation of the axonal and dendritic trees. Examples include the usage of circular statistics of phase information (Obara et al. 2012b), steerable wavelet based local symmetry detection (Püspöki et al. 2013), or combining eigen analysis of the Hessian and correlation matrix (Su et al. 2012). The problem with existing methods is that they often focus on only one particular type of critical point (for example bifurcations but not terminations), or they use rather rigid geometrical models (for example assuming symmetry), while in practice there are many degrees of freedom (Michaelis and Sommer 1994). Image filtering methods for bifurcation detection have also been combined with supervised machine-learning based approaches such as support vector machines (Türetken et al. 2011), artificial neural networks (Bevilacqua et al. 2009), or with multiple classifiers using AdaBoost (Zhou et al. 2007), but these lack flexibility in that they require a training stage for each application.

Robust neuron tracing requires knowledge of not only the bifurcation points but also the termination points. Since each type of critical point may vary considerably in terms of geometry (orientation and diameter of the branches) and image intensity (often related to the branch diameter), designing or training a dedicated filter for each possible case is impractical, and a more integrated approach is highly desirable for both detection and characterization of the different types of critical points. To the best of our knowledge, no generic methods currently exist for critical-point detection in neuron images. The method proposed in this paper aims to fill this gap and to complement exploratory neuron reconstruction algorithms that initialize on a set of seed points.

Proposed Method

Our proposed method for detection and characterization of critical points consists of three steps: feature extraction (“Feature Extraction”), fuzzy-logic based mapping (“Fuzzy-Logic Based Mapping”), and, finally, critical-point determination (“Critical-Point Determination”). Here we describe each step in detail.

Feature Extraction

The aim of the feature extraction step is to compute a set of quantitative features of the local image structure at each pixel position that helps to discriminate between different types of critical points. Since the tree-like neuronal image structures typically cover only a small portion of the image, we avoid unnecessary computations by first performing a foreground selection step (“Foreground Selection”), which discards image locations that are very unlikely to contain neuronal structures and keeps only those regions that are worthy of further examination. Next, the angular profile (“Angular Profile Analysis”) of each foreground pixel is constructed, from which the quantitative features are computed.

Foreground Selection

To determine whether a pixel location (x, y) in a given image I should be considered as foreground or background, we analyze the local intensity variation ρ(x, y) within a circular neighborhood of radius rd centered at that location. To avoid making strong assumptions about the local intensity distribution we chose to use the difference between the 95th and the 5th percentile of the intensities within the neighborhood as the measure of variation:

| 1 |

| 2 |

| 3 |

where W and H denote, respectively, the width and the height of I in pixels. The value of ρ is relatively low within more or less homogeneous regions (background but also the soma) but relatively high in regions containing neuronal branches. Consequently, the histogram of ρ computed over the entire image contains two clusters (representing foreground and background pixels), which can be separated using simple percentile thresholding (Doyle 1962). The percentile should be chosen such that background pixels (true negatives) are removed as much as possible while at the same time the foreground pixels (true positives) are retained as much as possible (in practice this implies allowing for false positives). We found that in our applications a percentile of around 75 is a safe threshold (Fig. 2). Small gaps in the foreground region are closed by morphological dilation. The resulting set of foreground pixel locations is denoted by F. In our applications the parameter rd is typically set to the diameter of the axonal and dendritic structures observed in the image.
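The foreground selection described above can be sketched in a few lines. This is a minimal illustration only, assuming a plain list-of-lists image, linear-interpolation percentiles, and neighborhoods truncated at the image border; the function names (`percentile`, `local_variation`, `foreground_mask`) are hypothetical, and the morphological dilation step is omitted.

```python
import math

def percentile(values, q):
    """Linear-interpolation percentile of a non-empty list, q in [0, 100]."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)

def local_variation(image, r):
    """rho(x, y): 95th minus 5th intensity percentile inside a disc of radius r."""
    h, w = len(image), len(image[0])
    offsets = [(dx, dy) for dy in range(-r, r + 1) for dx in range(-r, r + 1)
               if dx * dx + dy * dy <= r * r]
    rho = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Assumption: pixels outside the image are simply ignored.
            vals = [image[y + dy][x + dx] for dx, dy in offsets
                    if 0 <= x + dx < w and 0 <= y + dy < h]
            rho[y][x] = percentile(vals, 95) - percentile(vals, 5)
    return rho

def foreground_mask(rho, q=75):
    """Keep pixels whose variation exceeds the q-th percentile of rho."""
    threshold = percentile([v for row in rho for v in row], q)
    return [[v > threshold for v in row] for row in rho]

# Toy 12x12 image: dark background with one bright horizontal line (a "branch").
img = [[0.0] * 12 for _ in range(12)]
for x in range(12):
    img[6][x] = 1.0
mask = foreground_mask(local_variation(img, r=2))
```

In this toy case the mask keeps a band of three rows around the bright line and discards the homogeneous background, which mirrors the intended behavior: false positives near the structure are tolerated so that true foreground is not lost.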

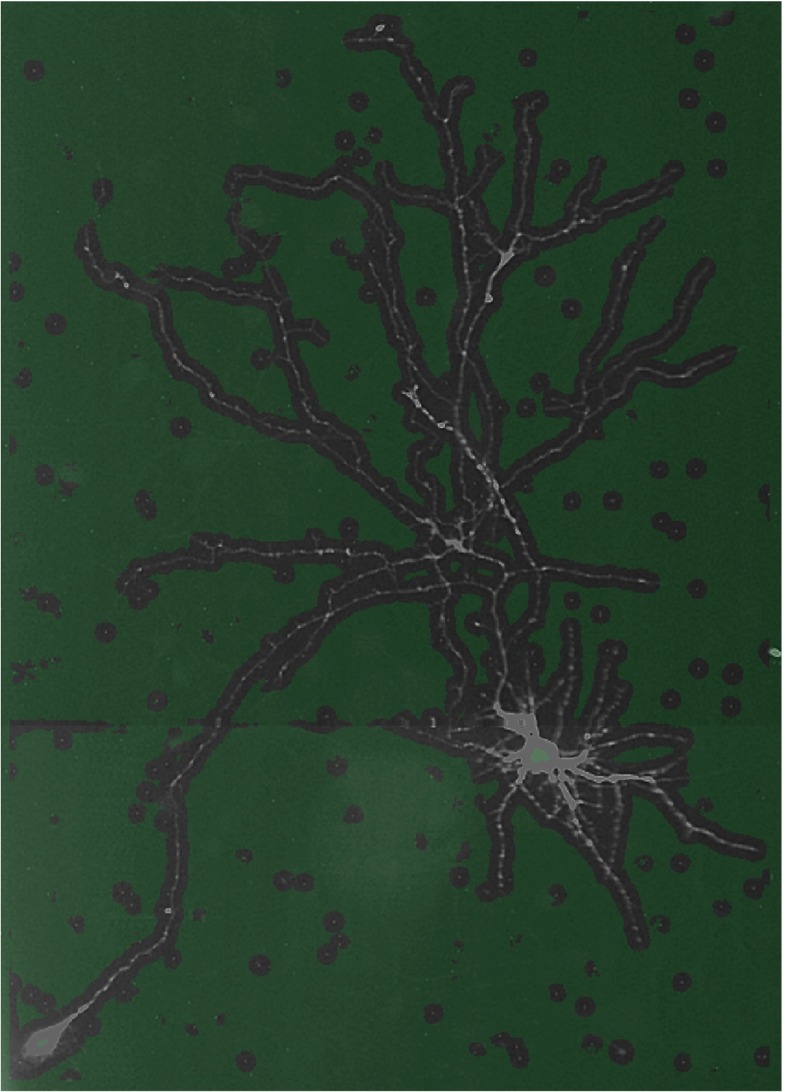

Fig. 2.

Example of foreground selection. The original image of 560×780 pixels is divided into background (green transparent mask) and foreground (gray-scale regions without mask) using rd = 8 pixels and the 75th variation percentile as threshold. In this example, 25% of the total number of pixels is selected for further processing

Angular Profile Analysis

For each selected foreground location, a local angular profile is computed and analyzed. The key task here is to assess the presence and properties of any curvilinear image structures passing through the given location. To this end we correlate the image with a set of oriented kernels distributed evenly over a range of angles around that location (Radojević et al. 2014). The basic kernel used for this purpose is of size D×D pixels and has a constant profile in one direction and a Gaussian profile in the orthogonal direction (Fig. 3):

$$G(x, y) = \frac{1}{S}\exp\!\left(-\frac{x^2}{2\sigma_D^2}\right) \tag{4}$$

where S is a normalization factor such that the sum of G(x, y) over all kernel pixels is unity. We chose the Gaussian both because we observed that the cross-sectional profile of axons and dendrites in our applications is approximately Gaussian-like and because the Gaussian is a theoretically well-justified filter for regularization purposes. The parameters D and σD determine the size and shape of the kernel profile and should correspond to the expected branch diameter.

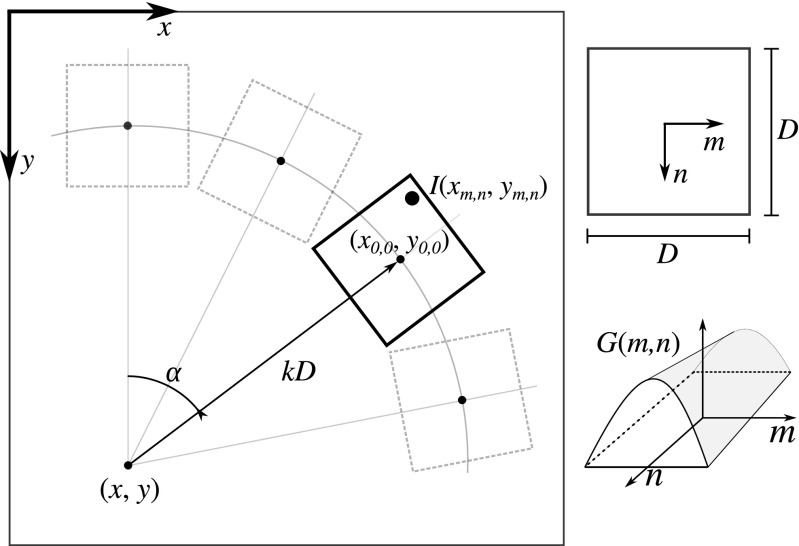

Fig. 3.

Geometry involved in the computation of the angular profile. In effect, the value of p(x, y, α, k, D) is the correlation of the image I(x, y) with the kernel G(m, n) of size D×D pixels, after rotating the kernel patch over angle α and shifting it over kD with respect to (x, y)

Using the kernel we compute the local angular profile at any pixel location (x, y) in the given image I as:

$$p(x, y, \alpha, k, D) = \sum_{m}\sum_{n} I(x_{m,n}, y_{m,n})\, G(m, n) \tag{5}$$

where the transformed image coordinates are obtained as:

| 6 |

and the summation is performed over all (m, n) for which the kernel is defined. That is, p(x, y, α, k, D) is the correlation of the image with the kernel patch rotated over angle α and shifted over a distance kD with respect to (x, y) in the direction corresponding to that angle (Fig. 3). In practice, p is calculated for a discrete set of angles evenly spaced over the full circle, αi = 2πi/Nα, i = 0,…, Nα−1, where Nα is automatically set such that the circle with radius kD is sampled with pixel resolution. The parameter k is typically set slightly larger than 0.5 so as to scan the neighborhood around the considered pixel (x, y). To obtain the image intensity at non-integer transformed locations (xm,n, ym,n), linear interpolation is used.
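The angular profile computation can be sketched as follows. This is an illustrative sketch rather than the authors' implementation: the exact coordinate transform (6) is not reproduced here, so the axis and sign conventions below are assumptions, as are the function names (`make_kernel`, `bilinear`, `angular_profile`), the zero padding outside the image, and the way the number of angles is derived from the circle circumference.

```python
import math

def make_kernel(D, sigma):
    """D x D kernel: Gaussian across m (columns), constant along n (rows), unit sum."""
    c = (D - 1) / 2.0
    row = [math.exp(-((m - c) ** 2) / (2.0 * sigma ** 2)) for m in range(D)]
    S = D * sum(row)  # normalization so that all kernel values sum to one
    return [[v / S for v in row] for _ in range(D)]

def bilinear(image, x, y):
    """Linearly interpolated intensity at (x, y); zero outside the image (assumption)."""
    h, w = len(image), len(image[0])
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0

    def px(ix, iy):
        return image[iy][ix] if 0 <= ix < w and 0 <= iy < h else 0.0

    return ((1 - fx) * (1 - fy) * px(x0, y0) + fx * (1 - fy) * px(x0 + 1, y0)
            + (1 - fx) * fy * px(x0, y0 + 1) + fx * fy * px(x0 + 1, y0 + 1))

def angular_profile(image, x, y, D, sigma, k=0.5, n_alpha=None):
    """Correlate the image with the kernel rotated by each angle and shifted by k*D."""
    kernel = make_kernel(D, sigma)
    if n_alpha is None:
        # Roughly one sample per pixel along the circle of radius k*D.
        n_alpha = max(8, math.ceil(2 * math.pi * k * D))
    c = (D - 1) / 2.0
    profile = []
    for i in range(n_alpha):
        a = 2 * math.pi * i / n_alpha
        ux, uy = math.cos(a), math.sin(a)   # along-branch direction
        vx, vy = -uy, ux                    # across-branch direction
        total = 0.0
        for n in range(D):
            for m in range(D):
                along = (n - c) + k * D
                across = m - c
                xs = x + along * ux + across * vx
                ys = y + along * uy + across * vy
                total += kernel[n][m] * bilinear(image, xs, ys)
        profile.append(total)
    return profile
```

For a one-pixel-wide bright line that starts at the probed pixel and runs in the positive x direction, the profile returned by this sketch peaks at angle index 0 and is much weaker in the opposite direction, which is the signature of a termination.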

In contrast with previous works, which used differential kernels for directional filtering and profiling (Yu et al. 1998; Can et al. 1999; Zhang et al. 2007), we employ the matched kernel (4), which avoids noise amplification. Although applying a set of rotated kernels is computationally more demanding than Hessian or steerable filtering based methods, it provides more geometrical flexibility in matching the kernels with the structures of interest while retaining excellent directional sensitivity. In our framework, the computational burden is drastically reduced by the foreground selection step, and further reduction is possible since the filtering process is highly parallelizable.

After computing the angular profile we further process it in order to extract several features (Fig. 4) relevant for critical-point detection and characterization:

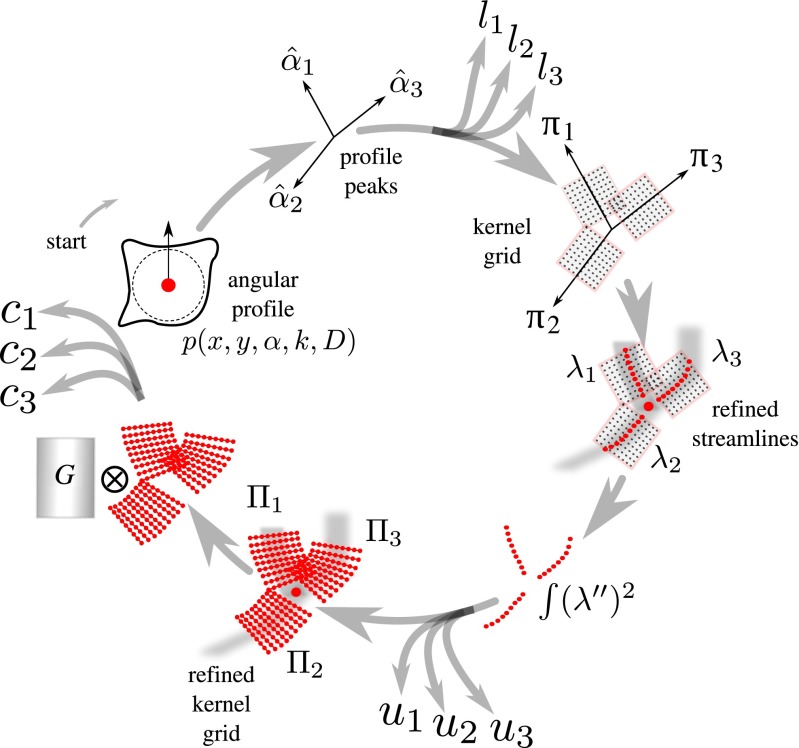

Fig. 4.

Flowchart of the feature extraction scheme. The example showcases a bifurcation but the same scheme is used also for terminations. The scheme, which starts with the angular profile p(x, y, α, k, D) and is executed clockwise, is applied to each pixel in the selected foreground regions and results in the set of features li, ui, and ci, where i indexes the streamlines. See main text for details

Peaks

At each foreground pixel location we first determine how many line-like image structures pass through it and in which directions. This is done by finding the local maxima (“peaks”) in the angular profile at that location. Since the oriented kernels act as low-pass filters, the profile is sufficiently smooth to extract the peaks reliably using an iterative line-search algorithm (Flannery et al. 1992). The peaks found correspond to the angles (indexed by i in the following) in whose directions the image intensities are highest. Up to four peaks are retained, which accommodates terminations, normal body points, and junctions (bifurcations and crossovers).
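As a simplified stand-in for the iterative line search, the sketch below finds peaks by scanning the sampled profile for circular local maxima and keeping the strongest four. It is not the algorithm used in the paper; the function name `circular_peaks` and the tie-handling rule are assumptions made for illustration.

```python
def circular_peaks(profile, max_peaks=4):
    """Indices of local maxima of a circular (wrap-around) profile, strongest first."""
    n = len(profile)
    peaks = [i for i in range(n)
             # Strictly above the previous sample, not below the next one,
             # so a flat two-sample plateau yields a single peak.
             if profile[i] > profile[i - 1] and profile[i] >= profile[(i + 1) % n]]
    peaks.sort(key=lambda i: profile[i], reverse=True)
    return peaks[:max_peaks]

# Hypothetical 8-sample profile with three directions of high response.
example = [9, 2, 1, 6, 1, 2, 7, 3]
print(circular_peaks(example))  # [0, 6, 3]
```

Index `i - 1` wraps around for `i = 0` through Python's negative indexing, so the first and last samples are treated as neighbors, as required for an angular profile.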

Likelihood

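For each peak, at angle $\hat{\alpha}_i$, we calculate a likelihood li∈[0,1] from the angular profile according to:

$$l_i = \frac{p(x, y, \hat{\alpha}_i, k, D) - p_{\min}}{p_{\max} - p_{\min}} \tag{7}$$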

where pmin and pmax denote, respectively, the minimum and maximum of p(x, y, α, k, D) over α.
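A minimal sketch of the likelihood computation, assuming it is the min-max normalization of the profile value at each peak (the only form consistent with li∈[0,1] and the stated roles of pmin and pmax). The function name and the handling of a perfectly flat profile are assumptions.

```python
def peak_likelihoods(profile, peak_indices):
    """Min-max normalized profile value at each peak index, in [0, 1]."""
    p_min, p_max = min(profile), max(profile)
    if p_max == p_min:
        # Flat profile: no direction stands out (assumed convention).
        return [0.0 for _ in peak_indices]
    return [(profile[i] - p_min) / (p_max - p_min) for i in peak_indices]

# Hypothetical profile and peak indices.
print(peak_likelihoods([9, 2, 1, 6, 1, 2, 7, 3], [0, 6, 3]))  # [1.0, 0.75, 0.625]
```

By construction the strongest peak always receives likelihood 1, so the feature expresses how prominent each direction is relative to the best direction at the same pixel, not an absolute intensity.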

Energy

Next we consider the local grid for each peak (Fig. 4), consisting of the transformed coordinates (xm,n, ym,n) corresponding to (6), and we extract a refined centerline point set λi (or “streamline”) on this grid by finding for each n the local maximum over m:

| 8 |

| 9 |

We quantify how much the streamline deviates from a straight line by estimating its bending energy ui≥0 as:

| 10 |

where Δm is the pixel spacing in the direction of m and the summation extends over all n for which the summand can be evaluated. This calculation is a discrete approximation of the integral squared second-order derivative of the centerline function if it were continuously defined.
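The idea behind the bending energy can be illustrated with a finite-difference sketch. The exact form and scaling of (10) are not reproduced here, so this is only an assumed discretization of the integral of the squared second derivative: the streamline is represented by its lateral offsets along the grid, sampled at a uniform `spacing`, and the function name is hypothetical.

```python
def bending_energy(offsets, spacing=1.0):
    """Approximate integral of the squared second derivative of a sampled curve."""
    total = 0.0
    for n in range(1, len(offsets) - 1):
        # Central second difference; defined only where both neighbors exist.
        second_diff = offsets[n + 1] - 2.0 * offsets[n] + offsets[n - 1]
        total += (second_diff / spacing ** 2) ** 2 * spacing
    return total

print(bending_energy([0, 1, 2, 3, 4]))  # 0.0 for a straight (even if tilted) line
print(bending_energy([0, 0, 1, 0, 0]))  # 6.0 for a single kink
```

A straight streamline yields zero energy regardless of its slope, whereas kinks contribute quadratically, which is why a low value indicates a smooth, line-like structure.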

Correlation

Given a streamline λi we estimate the orthogonal direction at each point in the set by averaging the orthogonal directions of the two neighboring streamline segments corresponding to that point (that is, from the point to the next point, and from the point to the previous point). Using these direction estimates we sample a refined local grid, consisting of image coordinates relative to the streamline (Fig. 4), and compute a normalized cross-correlation (Lewis 1995) score ci∈[−1,1] as:


| 11 |

where, similar to the angular profile calculation (5), the summations extend over all (m, n) for which the kernel is defined, and the image intensities and the kernel values are each taken relative to their respective means. Effectively ci quantifies the degree to which the template G matches a straightened version of the streamline. To cover a range of possible scales (radii of the underlying image structures), we take the largest score over a set of templates whose Gaussian profile model (Su et al. 2012) has standard deviations covering a set of values measured in pixels.
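The correlation feature can be sketched with the standard normalized cross-correlation formula, taking the best score over several template widths. The sampling of the straightened patch along the streamline is assumed to have been done already; the function names and the example widths are hypothetical.

```python
import math

def gaussian_template(D, sigma):
    """D x D template: Gaussian across columns, constant along rows."""
    c = (D - 1) / 2.0
    row = [math.exp(-((m - c) ** 2) / (2.0 * sigma ** 2)) for m in range(D)]
    return [row[:] for _ in range(D)]

def ncc(patch, template):
    """Normalized cross-correlation of two equally sized 2D arrays, in [-1, 1]."""
    a = [v for row in patch for v in row]
    b = [v for row in template for v in row]
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(sum((x - mean_a) ** 2 for x in a)
                    * sum((y - mean_b) ** 2 for y in b))
    return num / den if den > 0 else 0.0  # flat patch: no correlation (assumption)

def best_ncc(patch, sigmas):
    """Largest NCC score over templates with the given standard deviations."""
    D = len(patch)
    return max(ncc(patch, gaussian_template(D, s)) for s in sigmas)

# A patch that is a brightened, offset copy of the sigma = 2 template.
patch = [[3.0 * v + 2.0 for v in row] for row in gaussian_template(5, 2.0)]
score = best_ncc(patch, sigmas=[1.0, 2.0, 3.0])
```

Because the score is invariant to gain and offset, the patch above matches the template with standard deviation 2 almost perfectly even though its intensities differ, which is the property that makes the feature robust to varying branch brightness.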

Fuzzy-Logic Based Mapping

The feature values extracted at each foreground image location subsequently need to be processed in order to assess the presence of a critical point and its type. Recognizing that in practice everything is “a matter of degree” (Zadeh 1975), and allowing for nonlinear input-output mappings, we chose to use fuzzy logic for this purpose. Fuzzy logic has been successfully used in many areas of engineering (Mendel 1995) but to the best of our knowledge has not been explored for neuron critical-point analysis. We briefly describe the basics of fuzzy logic (“Basics of Fuzzy Logic”) and then present our specific fuzzy-logic system for calculating critical-point maps of neuron images (“Termination and Junction Mapping”).

Basics of Fuzzy Logic

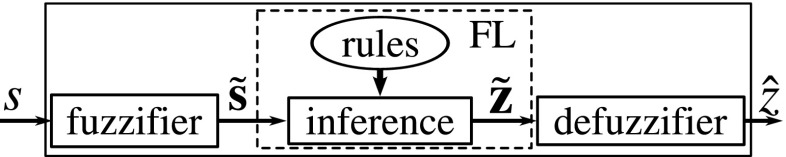

In a fuzzy-logic system (Fig. 5), numerical inputs are first expressed in linguistic terms (the fuzzification step), and are then processed based on predefined rules to produce linguistic outputs (the inference step), which are finally turned back into numerical values (the defuzzification step).

Fig. 5.

Scheme of a single input/output fuzzy-logic (FL) system. A scalar input value s is converted to a vector containing the memberships of s for each of the input fuzzy sets, resulting in a vector containing the memberships of z for each of the output fuzzy sets

Fuzzification

Given an input scalar value s, the fuzzification step results in a vector whose elements express the degree of membership of s to the input fuzzy sets, each corresponding to a linguistic term describing s. A fuzzy set is defined by a membership function quantifying the degree to which s can be described by the corresponding linguistic term. Commonly used membership functions are trapezoidal, Gaussian, triangular, and piecewise linear (Mendel 1995). As an example, we may have linguistic terms LOW and HIGH, representing the subjective notions “low” and “high”, respectively. The degrees to which “s is low” (which in this paper we will write as s = LOW) and “s is high” (s = HIGH) are given by membership values μLOW(s) and μHIGH(s), respectively. The output of the fuzzification step thus becomes [μLOW(s), μHIGH(s)].

Inference

The input fuzzy set memberships are processed by the inference engine to produce a fuzzy output based on rules expressing expert knowledge. The rules can be either explicitly defined or implicitly learned by some training process, and may express nonlinear input-output relationships and involve multiple inputs. In engineering applications, the rules are commonly given as IF-THEN statements about the input and output linguistic terms. For example, the output terms could be OFF, NONE, and ON, indicating whether a certain property of interest is “off”, “none” (expressing ambiguity), or “on”. A rule could then be:

$$R_i:\ \text{IF}\ (s_1 = \text{HIGH}) \wedge (s_2 = \text{LOW})\ \text{THEN}\ (z = \text{OFF}) \tag{12}$$

where z is the variable over the output range. This is not a binary logical statement, where the input and output conditions can be only true or false, but a fuzzy logical statement, where the conditions are expressed in terms of memberships, in this case μHIGH(s1), μLOW(s2), and μOFF(z). Input conditions are often combined using the operators ∧ (denoting fuzzy intersection) or ∨ (denoting fuzzy union), which are commonly defined as, respectively, the minimum and maximum of the arguments (Mendel 1995). In our example, the IF-part of Ri (12) would result in the following intermediate value (degree of verity):

$$v_i = \min\{\mu_{\text{HIGH}}(s_1),\ \mu_{\text{LOW}}(s_2)\} \tag{13}$$

This value is then used to constrain the fuzzy set corresponding to the output linguistic term addressed by Ri, in this case OFF, resulting in the output fuzzy set:

$$\Upsilon_i(z) = \min\{v_i,\ \mu_{\text{OFF}}(z)\} \tag{14}$$

In practice there may be many rules Ri, i = 1,…, NR, which are aggregated by the inference engine to produce a single output fuzzy set Υ. The common way to do this (Mendel 1995) is by means of a weighted fuzzy union:

$$\Upsilon(z) = \max_{i = 1, \ldots, N_R} w_i\, \Upsilon_i(z) \tag{15}$$

Although it is possible to assign a different weight to each rule by setting wi∈[0,1], in our applications this is not critical, and therefore we simply use wi = 1 for all i.

Defuzzification

In the final step of the fuzzy-logic system, the fuzzy output Υ is converted back to a scalar output value. Although there are many ways to do this, a common choice is to calculate the centroid (Mendel 1995):

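$$\bar{z} = \frac{\int z\, \Upsilon(z)\, dz}{\int \Upsilon(z)\, dz} \tag{16}$$

With this value we can finally calculate the vector of output fuzzy set memberships: [μOFF($\bar{z}$), μNONE($\bar{z}$), μON($\bar{z}$)].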
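The three steps (rule evaluation, aggregation, centroid defuzzification) can be sketched for the example rule. This is a generic Mamdani-style sketch under stated assumptions: output sets are taken to be Gaussians centered at 0, 1, and 2 with standard deviation 0.4, rule outputs are clipped with the minimum operator, all rule weights are 1, and the centroid is approximated on a sampled grid over an arbitrarily chosen range. All names and input membership values are hypothetical.

```python
import math

def gaussian_set(center, sigma=0.4):
    """Membership function of an output fuzzy set (assumed Gaussian shape)."""
    return lambda z: math.exp(-((z - center) ** 2) / (2.0 * sigma ** 2))

OUTPUT_SETS = {"OFF": gaussian_set(0.0), "NONE": gaussian_set(1.0), "ON": gaussian_set(2.0)}

def aggregate(fired_rules, z):
    """Fuzzy union (max) of output sets, each clipped (min) at its rule's verity."""
    return max(min(v, OUTPUT_SETS[term](z)) for v, term in fired_rules)

def centroid(fired_rules, z_min=-2.0, z_max=4.0, steps=600):
    """Centroid of the aggregated output set, approximated on a uniform grid."""
    num = den = 0.0
    for i in range(steps + 1):
        z = z_min + (z_max - z_min) * i / steps
        y = aggregate(fired_rules, z)
        num += z * y
        den += y
    return num / den if den > 0 else None

# Example rule: IF s1 = HIGH AND s2 = LOW THEN z = OFF.
verity = min(0.8, 0.6)  # hypothetical values of mu_HIGH(s1) and mu_LOW(s2)
z_off = centroid([(verity, "OFF")])                   # close to 0
z_mix = centroid([(verity, "OFF"), (verity, "ON")])   # close to 1, by symmetry
```

The second case shows the nonlinear character of the mapping: two equally strong but conflicting rules pull the centroid to the middle of the output range, where the NONE set has its maximum.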

Termination and Junction Mapping

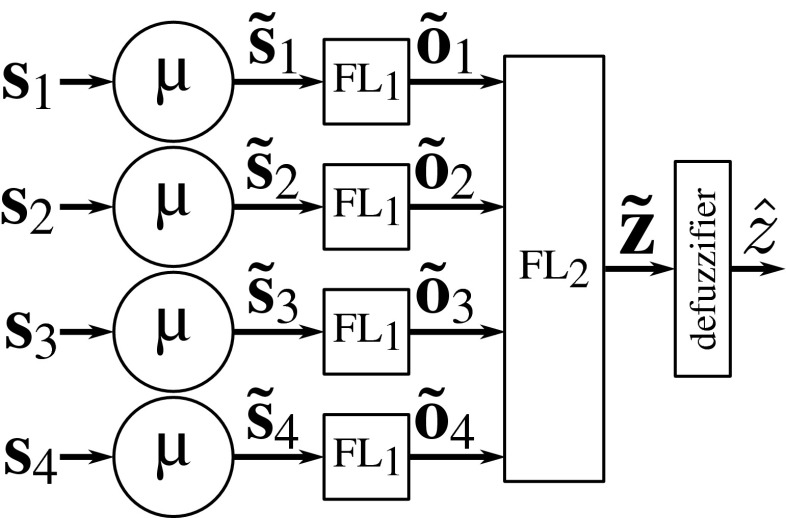

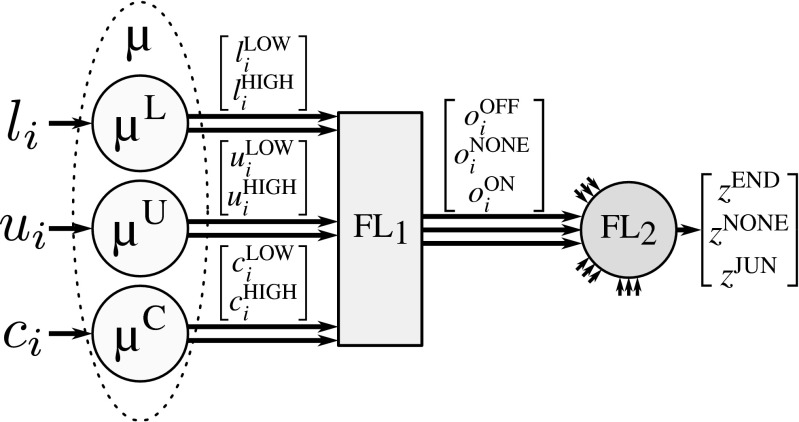

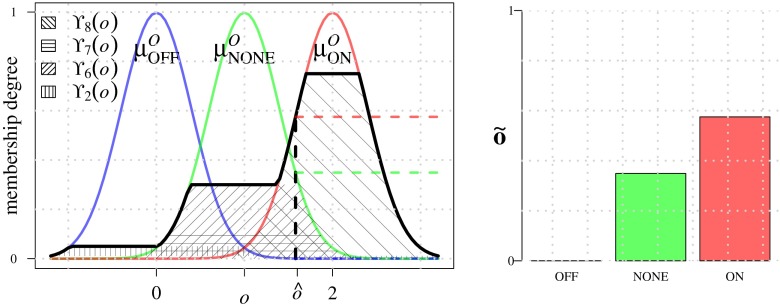

To determine the presence and type of critical point at any foreground image location, we use a cascade of two fuzzy-logic systems, representing two decision levels (Fig. 6). The first level takes as input vectors si = [li, ui, ci], i = 1,…,4, which contain the features for each of the streamlines extracted in the angular profile analysis step at the image location under consideration (“Angular Profile Analysis”). For each streamline (Fig. 7), the features are fuzzified (μ) and processed by the first fuzzy-logic module (FL1), which determines the degree to which the streamline indeed represents a line-like image structure (ON), or not (OFF), or whether the image structure is ambiguous (NONE). In cases where less than four streamlines were found by the angular profile analysis step, the feature vectors of the nonexisting streamlines are set to 0. The fuzzy output for all four streamlines together forms the input for the second decision level, where another fuzzy-logic module (FL2) determines the degree to which the image location corresponds to a junction (JUN), or a termination (END), or neither of these (NONE).

Fig. 6.

Architecture of the proposed fuzzy-logic system for critical-point detection. A cascade of two fuzzy-logic modules (FL1 and FL2) is used, where the first determines the degree to which streamlines (up to four) are present at the image location under consideration, and based on this information the second determines the degree to which that location corresponds to the possible types of critical points

Fig. 7.

Architecture of the proposed fuzzy-logic system for processing the information of one streamline. Input feature values are fuzzified into linguistic terms LOW and HIGH, which are translated by the first fuzzy-logic module (FL1) into intermediate linguistic terms OFF, NONE, ON, which are finally translated by the second fuzzy-logic module (FL2) into linguistic terms END, NONE, JUN

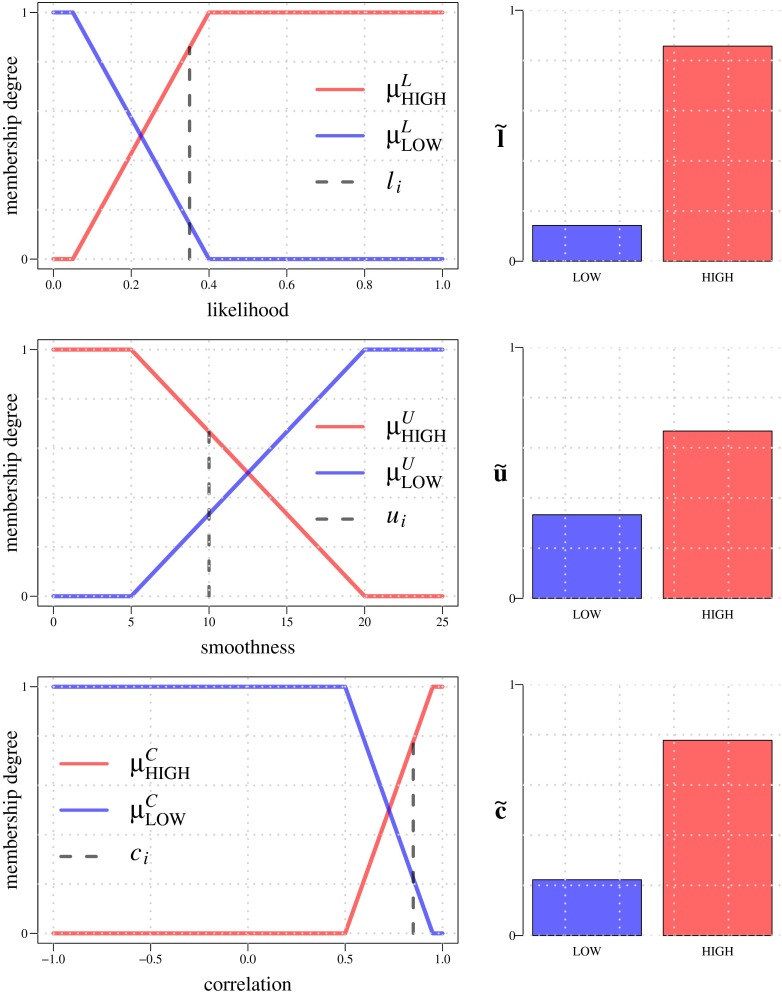

The input streamline features, li, ui, ci, are expressed in linguistic terms LOW and HIGH using membership functions μLOW and μHIGH defined for each type of feature. In our application we use trapezoidal membership functions, each having two inflection points, such that μLOW and μHIGH are each other’s complement (Fig. 8). For example, the degrees to which li = LOW and li = HIGH are given by μLOW(li) and μHIGH(li), respectively, and because of this complementarity we often simply write μL to refer to both membership functions (Fig. 7). Similarly, the membership degrees of ui and ci are given by μU and μC, respectively. Summarizing, we use the following notations and definitions for the fuzzification step:

| 17 |

and the lower and higher inflection points of μL are denoted by LLOW and LHIGH, and similarly ULOW and UHIGH for μU, and CLOW and CHIGH for μC (Fig. 8).

Fig. 8.

Input membership functions used in the fuzzification step for FL1. Example LOW and HIGH membership values are shown (right column) for input values (dashed vertical lines in the plots on the left) li = 0.35 (top row), ui = 10 (middle row), and ci = 0.85 (bottom row). The inflection points of the membership functions are, respectively, LLOW = 0.05 and LHIGH = 0.4 for μL, UHIGH = 5 and ULOW = 20 for μU, and CLOW = 0.5 and CHIGH = 0.95 for μC. Notice that features ui (the centerline bending energies of the streamlines) are reinterpreted here to express the degree of smoothness (hence the inverted membership functions as compared to the other two)
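The complementary LOW and HIGH memberships can be sketched as a clamped linear ramp between the two inflection points. The piecewise-linear form between the inflection points is an assumption consistent with the description of the trapezoidal functions, and the function names are hypothetical. The inverted case for the bending energy is handled by passing the inflection points in reverse order.

```python
def mu_high(s, low_point, high_point):
    """HIGH membership: 0 at low_point, 1 at high_point, linear in between.

    Works for low_point > high_point as well (inverted ramp)."""
    t = (s - low_point) / (high_point - low_point)
    return min(1.0, max(0.0, t))

def fuzzify(s, low_point, high_point):
    """Complementary memberships of s in the LOW and HIGH fuzzy sets."""
    h = mu_high(s, low_point, high_point)
    return {"LOW": 1.0 - h, "HIGH": h}

# Input values and inflection points quoted in the caption of Fig. 8.
mu_l = fuzzify(0.35, 0.05, 0.40)   # likelihood
mu_u = fuzzify(10.0, 20.0, 5.0)    # bending energy (inverted: U_LOW = 20, U_HIGH = 5)
mu_c = fuzzify(0.85, 0.50, 0.95)   # correlation
```

Under this sketch the three HIGH memberships come out at roughly 0.86, 0.67, and 0.78, so a streamline with these feature values would be considered fairly likely, fairly smooth, and fairly well correlated.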

Taken together, the input to FL1 is the matrix of input memberships defined above, and the output is the vector of memberships to the linguistic terms OFF, NONE, ON:

| 18 |

To calculate these memberships we introduce a scalar variable o, where o = 0 corresponds to OFF, o = 1 to NONE, and o = 2 to ON, and we define Gaussian membership functions μOFF(o), μNONE(o), and μON(o), centered around 0, 1, and 2, respectively (Fig. 9), and with fixed standard deviation 0.4 so that they sum to about 1 in the interval [0,2]. The rules used by FL1 to associate the input terms LOW and HIGH to the output terms OFF, NONE, and ON, are given in Table 1. They are based on the heuristic assumption that a line-like image structure exists (ON) if the evidence represented by all three features supports it (HIGH). By contrast, if the likelihood is LOW and at least one other feature is also LOW, this indicates that no such structure exists (OFF). In all remaining cases, some structure may exist, but it is not line-like (NONE). As an example, rule R8 (Table 1) is given by:

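$$R_8:\ \text{IF}\ (l_i = \text{HIGH}) \wedge (u_i = \text{HIGH}) \wedge (c_i = \text{HIGH})\ \text{THEN}\ (o = \text{ON}) \tag{19}$$

which results in the verity value:

$$v_8 = \min\{\mu_{\text{HIGH}}(l_i),\ \mu_{\text{HIGH}}(u_i),\ \mu_{\text{HIGH}}(c_i)\} \tag{20}$$

and the output fuzzy set:

$$\Upsilon_8(o) = \min\{v_8,\ \mu_{\text{ON}}(o)\} \tag{21}$$

All the rules are resolved and combined as:

$$\Upsilon(o) = \max\{\Upsilon_1(o), \ldots, \Upsilon_8(o)\} \tag{22}$$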

and centroid defuzzification then results in a scalar output value. This procedure is repeated for each streamline, yielding one such output value per streamline, from which the output of each FL1 (18) is calculated using the membership functions:

| 23 |

Fig. 9.

Output membership functions used in module FL1. Example output fuzzy sets Υi corresponding to rules Ri from Table 1 are shown as the textured areas. The scalar output value (left panel) represents the centroid of the aggregated output fuzzy sets. The resulting output membership values (right panel) serve as input for module FL2

Table 1.

The set of rules employed by FL1

| Ri | l | u | c | o |
|---|---|---|---|---|
| 1 | LOW | LOW | LOW | OFF |
| 2 | LOW | LOW | HIGH | OFF |
| 3 | LOW | HIGH | LOW | OFF |
| 4 | LOW | HIGH | HIGH | NONE |
| 5 | HIGH | LOW | LOW | NONE |
| 6 | HIGH | LOW | HIGH | NONE |
| 7 | HIGH | HIGH | LOW | NONE |
| 8 | HIGH | HIGH | HIGH | ON |
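The rule base of Table 1 can be evaluated with a short sketch of the first fuzzy-logic module. It takes the three HIGH memberships of one streamline (with LOW as the complement), applies the eight rules using the minimum for the IF-part and for clipping, aggregates with the maximum, and defuzzifies by a sampled centroid. The integration range [−1, 3], the grid step, and all names are assumptions made for illustration.

```python
import math
from itertools import product

OUT_CENTERS = {"OFF": 0.0, "NONE": 1.0, "ON": 2.0}

def gauss(o, center, sigma=0.4):
    return math.exp(-((o - center) ** 2) / (2.0 * sigma ** 2))

def fl1_output_term(l, u, c):
    """Output term of Table 1 for one combination of 'LOW'/'HIGH' input terms."""
    if (l, u, c) == ("HIGH", "HIGH", "HIGH"):
        return "ON"
    if l == "LOW" and "LOW" in (u, c):
        return "OFF"
    return "NONE"

def fl1(mu_l_high, mu_u_high, mu_c_high, o_min=-1.0, o_max=3.0, steps=400):
    """Return the defuzzified output and its memberships to OFF, NONE, ON."""
    mu = [{"LOW": 1.0 - m, "HIGH": m} for m in (mu_l_high, mu_u_high, mu_c_high)]
    # Strongest verity per output term; equivalent to max over clipped rule outputs.
    verity = {"OFF": 0.0, "NONE": 0.0, "ON": 0.0}
    for terms in product(("LOW", "HIGH"), repeat=3):
        v = min(mu[j][t] for j, t in enumerate(terms))
        out = fl1_output_term(*terms)
        verity[out] = max(verity[out], v)
    num = den = 0.0
    for i in range(steps + 1):
        o = o_min + (o_max - o_min) * i / steps
        y = max(min(verity[t], gauss(o, c)) for t, c in OUT_CENTERS.items())
        num += o * y
        den += y
    o_bar = num / den
    return o_bar, {t: gauss(o_bar, c) for t, c in OUT_CENTERS.items()}

o_bar, memberships = fl1(1.0, 1.0, 1.0)  # all evidence HIGH: only rule 8 fires
```

With all three features fully HIGH only rule 8 has nonzero verity and the output lands close to 2 (ON); with all three fully LOW only rule 1 fires and the output lands close to 0 (OFF). Intermediate inputs fire several rules at once and produce an output between these extremes.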

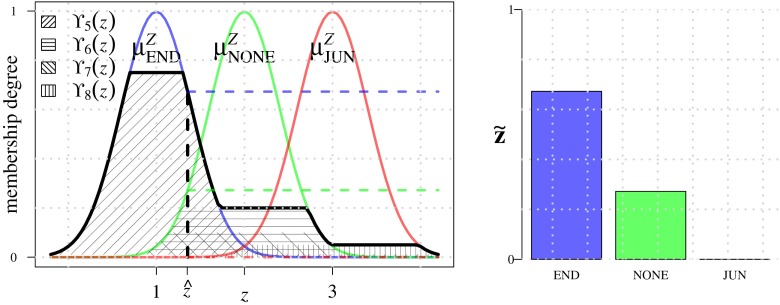

Moving on to the next level, the input to FL2 is the matrix of FL1 output memberships of the four streamlines, and the output is the vector of memberships to the linguistic terms END (termination), NONE (no critical point), JUN (junction):

| 24 |

To calculate these memberships we introduce a scalar variable z, where z = 1 corresponds to END, z = 2 to NONE, and z = 3 to JUN, and we define corresponding Gaussian membership functions μEND(z), μNONE(z), and μJUN(z), centered around 1, 2, and 3, respectively, and with fixed standard deviation 0.4 as before (Fig. 10). The rules used by FL2 to associate the input terms OFF, NONE, ON to the output terms END, NONE, JUN are given in Table 2. They are based on the heuristic assumption that there is a termination (END) if a single streamline is confirmed to correspond to a line-like image structure (ON) and the other three are confirmed to not correspond to such a structure (OFF). Conversely, if at least three are ON, there must be a junction at that location. Finally, if two are ON and two are OFF, or if at least two streamlines are ambiguous (NONE), we assume there is no critical point. Similar to FL1, all the rules of FL2 are evaluated and their results combined as:

| 25 |

which, after centroid defuzzification, results in a scalar output value, from which the output of FL2 (24) is calculated using the membership functions:

| 26 |

The proposed fuzzy-logic system is applied to each foreground pixel location (x, y)∈F (“Foreground Selection”) so that all memberships introduced above may be indexed by (x, y). Based on this we calculate the following two maps:

| 27 |

| 28 |

which indicate the degree to which any pixel (x, y) belongs to a termination or a junction, respectively.
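The reasoning carried out by FL2 can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes Mamdani-style inference (minimum for the rule antecedents, maximum for aggregation), numerical centroid defuzzification over z in [0, 4], and that the "at least two NONE" rules cover all six pairs of streamlines. The function names `build_rules` and `fl2` are hypothetical.

```python
import math

# Output terms and the centers of their Gaussian membership functions (std 0.4).
CENTERS = {"END": 1.0, "NONE": 2.0, "JUN": 3.0}
SIGMA = 0.4

def gauss(z, center, sigma=SIGMA):
    return math.exp(-0.5 * ((z - center) / sigma) ** 2)

def build_rules():
    """16 OFF/ON combinations plus 6 rules with two NONE inputs (None = don't care)."""
    rules = []
    for code in range(16):
        ins = tuple("ON" if (code >> (3 - k)) & 1 else "OFF" for k in range(4))
        n_on = ins.count("ON")
        out = "END" if n_on == 1 else "JUN" if n_on >= 3 else "NONE"
        rules.append((ins, out))
    for a in range(4):
        for b in range(a + 1, 4):
            ins = tuple("NONE" if k in (a, b) else None for k in range(4))
            rules.append((ins, "NONE"))
    return rules

def fl2(memberships, steps=401):
    """memberships: four dicts (one per streamline) mapping OFF/NONE/ON to [0, 1]."""
    strength = {term: 0.0 for term in CENTERS}
    for ins, out in build_rules():
        # Assumed AND operator: minimum over the inputs the rule specifies.
        w = min(m[t] for m, t in zip(memberships, ins) if t is not None)
        # Assumed aggregation: maximum over all rules with the same output term.
        strength[out] = max(strength[out], w)
    # Centroid of the aggregated, clipped output sets (assumed domain z in [0, 4]).
    num = den = 0.0
    for s in range(steps):
        z = 4.0 * s / (steps - 1)
        mu = max(min(strength[t], gauss(z, c)) for t, c in CENTERS.items())
        num += z * mu
        den += mu
    z_hat = num / den if den > 0 else CENTERS["NONE"]
    # Memberships of the defuzzified value to END, NONE, and JUN.
    return z_hat, {t: gauss(z_hat, c) for t, c in CENTERS.items()}
```

With crisp inputs, one ON streamline and three OFF streamlines give a defuzzified value close to 1 and a high END membership, while three or more ON streamlines give a value close to 3 and a high JUN membership.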

Fig. 10.

Output membership functions used in module FL2. Example output fuzzy sets Υi corresponding to rules Ri from Table 2 are shown as the textured areas. The defuzzified value (left panel) represents the centroid of the aggregated output fuzzy sets. The resulting output membership values (right panel) indicate the degree to which there may be a termination (END), junction (JUN), or neither of these (NONE) at the image pixel location under consideration

Table 2.

The set of rules employed by FL2. Empty entries indicate “don’t care” (could be OFF, NONE, or ON)

| Ri | o1 | o2 | o3 | o4 | z |
|---|---|---|---|---|---|
| 1 | OFF | OFF | OFF | OFF | NONE |
| 2 | OFF | OFF | OFF | ON | END |
| 3 | OFF | OFF | ON | OFF | END |
| 4 | OFF | OFF | ON | ON | NONE |
| 5 | OFF | ON | OFF | OFF | END |
| 6 | OFF | ON | OFF | ON | NONE |
| 7 | OFF | ON | ON | OFF | NONE |
| 8 | OFF | ON | ON | ON | JUN |
| 9 | ON | OFF | OFF | OFF | END |
| 10 | ON | OFF | OFF | ON | NONE |
| 11 | ON | OFF | ON | OFF | NONE |
| 12 | ON | OFF | ON | ON | JUN |
| 13 | ON | ON | OFF | OFF | NONE |
| 14 | ON | ON | OFF | ON | JUN |
| 15 | ON | ON | ON | OFF | JUN |
| 16 | ON | ON | ON | ON | JUN |
| 17 | NONE | NONE | | | NONE |
| 18 | NONE | | NONE | | NONE |
| 19 | NONE | | | NONE | NONE |
| 20 | | NONE | NONE | | NONE |
| 21 | | NONE | | NONE | NONE |
| 22 | | | NONE | NONE | NONE |

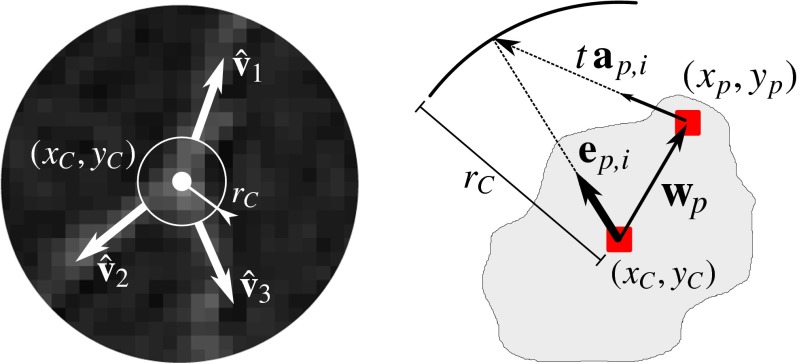

Critical-Point Determination

The ultimate aim of our method is to provide a list of critical points in the neuron image, with each point fully characterized in terms of type, location, size, and main branch direction(s). Since each critical point of a neuronal tree typically covers multiple neighboring pixels in the image, giving rise to a high value at the corresponding pixels in the maps IEND and IJUN, the final task is to segment the maps and to aggregate the information within each segmented region.

Due to noise, labeling imperfections, and structural ambiguities in the original image, the values of neighboring pixels in the maps may vary considerably, and direct thresholding usually does not give satisfactory results. To improve the robustness we first regularize the real-valued scores in the maps by means of local-average filtering with a radius of 3–5 pixels. Next, max-entropy based automatic thresholding (Kapur et al. 1985) is applied to segment the maps. In contrast with many other thresholding methods, we found it to perform well in separating the large but relatively flat (low information) background regions from the much smaller but more fluctuating (high information) regions of interest. The resulting binary images are further processed using a standard connected components algorithm (Sonka et al. 2007) to identify the critical-point regions.
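The segmentation steps described here (local averaging, max-entropy thresholding, connected components) can be sketched in plain Python as follows. This is an illustration under stated assumptions rather than the plugin's code: the averaging window is taken to be square, the map values are assumed to lie in [0, 1] and are quantized into 256 bins, and regions are 8-connected. All function names are hypothetical.

```python
import math
from collections import deque

def box_filter(img, radius):
    """Local average over a (2*radius+1)^2 window, truncated at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - radius), min(h, y + radius + 1))
                    for i in range(max(0, x - radius), min(w, x + radius + 1))]
            out[y][x] = sum(vals) / len(vals)
    return out

def max_entropy_threshold(hist):
    """Bin index t maximizing the summed entropies of bins [0..t] and (t..end]."""
    total = float(sum(hist))
    p = [h / total for h in hist]
    best_t, best_h, cum = 0, -math.inf, 0.0
    for t in range(len(p) - 1):
        cum += p[t]
        if cum <= 0.0 or cum >= 1.0:
            continue
        hb = -sum((q / cum) * math.log(q / cum) for q in p[: t + 1] if q > 0)
        hf = -sum((q / (1 - cum)) * math.log(q / (1 - cum)) for q in p[t + 1:] if q > 0)
        if hb + hf > best_h:
            best_t, best_h = t, hb + hf
    return best_t

def label_regions(mask):
    """8-connected components of a binary mask, each as a list of (x, y) pixels."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    regions = []
    for y0 in range(h):
        for x0 in range(w):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            seen[y0][x0] = True
            queue, region = deque([(x0, y0)]), []
            while queue:
                x, y = queue.popleft()
                region.append((x, y))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        i, j = x + dx, y + dy
                        if 0 <= i < w and 0 <= j < h and mask[j][i] and not seen[j][i]:
                            seen[j][i] = True
                            queue.append((i, j))
            regions.append(region)
    return regions

def segment_map(score_map, radius=3, bins=256):
    """Smooth a map with values in [0, 1], threshold it, and label the regions."""
    smooth = box_filter(score_map, radius)
    to_bin = lambda v: min(bins - 1, max(0, int(v * (bins - 1))))
    hist = [0] * bins
    for row in smooth:
        for v in row:
            hist[to_bin(v)] += 1
    t = max_entropy_threshold(hist)
    mask = [[to_bin(v) > t for v in row] for row in smooth]
    return label_regions(mask)
```

Calling `segment_map` on a termination or junction map returns one pixel list per candidate critical region, which is the input needed for the aggregation step that follows.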

Each critical region consists of a set of connected pixels xp = (xp, yp), p = 1,…, Np, where Np denotes the number of pixels in the region. From these, the representative critical-point location xC = (xC, yC) is calculated as:

| 29 |

while the critical-point size is represented by the radius of the minimum circle surrounding the region:

| 30 |

where wp = xp−xC (Fig. 11). To obtain regularized estimates of the main branch directions for the critical point, we aggregate the directions corresponding to the angular profile peaks (“Angular Profile Analysis”) of all the xp in the region as follows. For each xp we have angular profile peak direction vectors ap,i. Each of these vectors defines a line a(t) = xp + t ap,i parameterized by t. We establish the projection of this line onto the circle with center xC and radius rC by substituting x = a(t) into the equation of the circle and solving for t. From this we calculate the contributing unit vector (Fig. 11):

| 31 |

which points from xC to the intersection point. This is done for all p = 1,…, Np in the region and for each peak direction i, resulting in the set of vectors {ep,i}. Next, a recursive mean-shift clustering algorithm (Cheng 1995) is applied to {ep,i}, which converges to a set of L cluster vectors that represent the branches. For a critical region in IEND, we need only one main branch direction, which we simply take to be the cluster direction to which the largest number of ep,i were shifted. For a critical region in IJUN, we take as the main branch directions the (at least three) cluster directions to which the largest number of ep,i were shifted. These calculations are performed for all critical regions.
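The aggregation within one critical region can be sketched as below. The sketch rests on several assumptions that the text does not confirm: the location is taken to be the centroid of the region pixels, the radius is the largest pixel distance to that centroid, the forward intersection of each line with the circle is used, and a simple flat-kernel mean shift on unit vectors with a hypothetical 20-degree bandwidth stands in for the recursive mean-shift algorithm cited above. All names are hypothetical.

```python
import math

def centroid_and_radius(pixels):
    """Assumed definitions: mean pixel position and largest distance to it."""
    n = len(pixels)
    xc = sum(x for x, _ in pixels) / n
    yc = sum(y for _, y in pixels) / n
    rc = max(math.hypot(x - xc, y - yc) for x, y in pixels)
    return (xc, yc), rc

def project_direction(xp, a, xc, rc):
    """Unit vector from xc to where the line xp + t*a (t >= 0) meets the circle."""
    norm = math.hypot(a[0], a[1])
    a = (a[0] / norm, a[1] / norm)
    if rc == 0:
        return a  # single-pixel region: fall back to the peak direction itself
    w = (xp[0] - xc[0], xp[1] - xc[1])
    wa = w[0] * a[0] + w[1] * a[1]
    # Solve |w + t*a|^2 = rc^2 for t and keep the forward (non-negative) root.
    disc = max(0.0, wa * wa - (w[0] ** 2 + w[1] ** 2 - rc * rc))
    t = -wa + math.sqrt(disc)
    return ((w[0] + t * a[0]) / rc, (w[1] + t * a[1]) / rc)

def mean_shift_directions(vectors, bandwidth=math.radians(20), iters=50):
    """Flat-kernel mean shift on unit vectors; returns [mode, count], largest first."""
    cos_bw = math.cos(bandwidth)
    dot = lambda u, v: u[0] * v[0] + u[1] * v[1]
    modes = []
    for v in vectors:
        m = v
        for _ in range(iters):
            near = [u for u in vectors if dot(u, m) >= cos_bw]
            sx, sy = sum(u[0] for u in near), sum(u[1] for u in near)
            norm = math.hypot(sx, sy)
            if norm == 0:
                break
            new = (sx / norm, sy / norm)
            converged = dot(new, m) > 1 - 1e-12
            m = new
            if converged:
                break
        modes.append(m)
    clusters = []
    for m in modes:  # merge modes that ended up within one bandwidth of each other
        for c in clusters:
            if dot(c[0], m) >= cos_bw:
                c[1] += 1
                break
        else:
            clusters.append([m, 1])
    return sorted(clusters, key=lambda c: -c[1])
```

For a termination region one would keep only the first returned cluster, and for a junction region the first three or more, following the selection described in the text.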

Fig. 11.

Critical-point determination. A critical point is characterized by its type, centroid location (xC, yC), radius rC, and its main branch directions (left panel, in this case a bifurcation), aggregated from the pixels (xp, yp) in the corresponding critical region (right panel)

Implementational Details

The method was implemented in the Java programming language as a plugin for the image processing and analysis tool ImageJ (Abràmoff et al. 2004; Schneider et al. 2012). Since the feature extraction step (“Feature Extraction”), in particular the matched filtering for angular profile analysis, is quite computationally demanding, we applied parallelization in multiple ways to reduce the running time to acceptable levels (on the order of minutes on a regular PC). Specifically, the directional filtering was split between CPU cores, each taking care of a subset of the directions (depending on the number of available cores). After this, the angular profile analysis and calculation of the features were also split, with each core processing a subset of the foreground image locations. This was sufficient for our experiments. Further improvement in running time (down to real-time if needed) could be achieved by massive parallelization using GPUs (graphics processing units) instead of CPUs.
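The work-splitting scheme described here can be illustrated with a small sketch. It is written in Python for consistency with the other examples in this text, although the actual plugin is in Java, and it only shows the partitioning idea: a list of work items (filter directions, or foreground locations) is divided into one subset per worker. The names `split_round_robin` and `parallel_map_chunks` are hypothetical, and threads are used purely for illustration, since in CPython they speed up only work that releases the interpreter lock.

```python
import os
from concurrent.futures import ThreadPoolExecutor

def split_round_robin(items, n_parts):
    """Divide items into n_parts subsets of nearly equal size."""
    return [items[k::n_parts] for k in range(n_parts)]

def parallel_map_chunks(func, items, n_workers=None):
    """Apply func to every item, one subset of items per worker."""
    n_workers = n_workers or os.cpu_count() or 1
    chunks = [c for c in split_round_robin(list(items), n_workers) if c]

    def process(chunk):
        return [(item, func(item)) for item in chunk]

    with ThreadPoolExecutor(max_workers=len(chunks) or 1) as pool:
        partial = list(pool.map(process, chunks))
    # Merge the per-worker results into one item -> result mapping.
    return {item: result for part in partial for item, result in part}
```

In this scheme the first pass would use the filter directions as items and the second pass the foreground pixel coordinates, so that both stages scale with the number of available cores.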

Essential parameters that need to be set by the user are the scale parameters k and D (“Angular Profile Analysis”) and the inflection points LLOW, LHIGH, ULOW, UHIGH, CLOW, and CHIGH of the input membership functions used by fuzzy-logic module FL1. In our applications we set D to the expected neuron diameter in a given set of images while k = 0.7 was kept fixed. The L inflection points are always in the range [0,1] since the corresponding feature (likelihood) is normalized. Based on ample experience with many data sets we typically set LLOW close to 0 and LHIGH around 0.5 (Fig. 8). By contrast, the inflection points U correspond to a feature (centerline bending energy) that is not normalized and may vary widely from 0 to any positive value. To obtain sensible values for these we rely on the histogram of all calculated energy values in the image. Parameter ULOW is set to the threshold computed by the well-known triangle algorithm, while typically UHIGH≫ULOW. We note that the membership functions defined by these parameters are inverted (Fig. 8) such that the energy becomes a measure of smoothness. Finally, the C inflection points correspond again to a feature (correlation) with a fixed output range [−1,1]. In our applications we usually set them to CLOW∈[0.1,0.5] and CHIGH = 0.95 (Fig. 8).
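To make the choice of ULOW concrete, the following is a simplified sketch of the triangle algorithm applied to a histogram of energy values. It is not the ImageJ implementation, which includes additional refinements: a line is drawn from the histogram peak to the end of the longer tail, and the bin farthest from that line is taken as the threshold. The function names and the default number of bins are assumptions.

```python
def triangle_threshold(hist):
    """Bin index with the largest distance to the line from the peak to the tail end."""
    nonzero = [i for i, h in enumerate(hist) if h > 0]
    lo, hi = nonzero[0], nonzero[-1]
    peak = max(range(len(hist)), key=lambda i: hist[i])
    end = hi if (hi - peak) >= (peak - lo) else lo  # use the longer tail
    if end == peak:
        return peak
    dx, dy = end - peak, hist[end] - hist[peak]
    step = 1 if end > peak else -1
    best_i, best_d = peak, -1.0
    for i in range(peak, end + step, step):
        # Distance to the line, up to a constant factor (the line length).
        d = abs(dy * (i - peak) - dx * (hist[i] - hist[peak]))
        if d > best_d:
            best_i, best_d = i, d
    return best_i

def u_low_from_energies(energies, bins=64):
    """Histogram the energy values and return the threshold in energy units."""
    lo, hi = min(energies), max(energies)
    if hi == lo:
        return lo
    width = (hi - lo) / bins
    hist = [0] * bins
    for e in energies:
        hist[min(int((e - lo) / width), bins - 1)] += 1
    return lo + (triangle_threshold(hist) + 0.5) * width
```

The triangle rule is a natural fit for a strongly skewed histogram with one dominant peak near zero and a long tail, which is the shape one would expect for an unnormalized energy feature.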

All other aspects of our method that could be considered as user parameters either follow directly from these essential parameters or are fixed to the standard values mentioned in the text. For example, the radius rd of the circular neighborhood in the foreground selection step (“Foreground Selection”) can be set equal to D, and the standard deviation σD of the Gaussian profile (“Angular Profile Analysis”) can be set to D/6 to get a representative shape. Also, the output membership functions of FL1 (input to FL2) as well as the output membership functions of FL2 are Gaussians with fixed levels and standard deviation (“Termination and Junction Mapping”), as they do not substantially influence the performance of the algorithm.

Experimental Results

To evaluate the performance of our method in correctly detecting and classifying neuronal critical points we performed experiments with simulated images (using the ground truth available from the simulation) as well as with real fluorescence microscopy images (using manual annotation as the gold standard). After describing the performance measures (“Performance Measures”), we present and discuss the results of the evaluation on simulated images, including synthetic triplets (“Evaluation on Simulated Triplet Images”) and neurons (“Evaluation on Simulated Neuron Images”), and on real neuron images (“Evaluation on Real Neuron Images”), as well as the results of a comparison of our method with two other methods (“Comparison With Other Methods”).

Performance Measures

Performance was quantified by counting the correct and incorrect hits and the misses of the detection with respect to the reference data. More specifically, we counted the true-positive (TP), false-positive (FP), and false-negative (FN) critical-point detections, and we used these to calculate the recall R=TP/(TP+FN) and precision P=TP/(TP+FP). Both R and P take on values in the range from 0 (meaning total failure) to 1 (meaning flawless detection). They are commonly combined in the F-measure (Powers 2011), defined as the harmonic mean of the two: F=2 R P/(R+P). The F-measure was computed separately for each type of critical point considered in this paper, yielding FEND for terminations and FJUN for junctions. As a measure of overall performance we also computed the harmonic mean of the two F-measures: FBOTH = 2 FEND FJUN/(FEND+FJUN).
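The performance measures defined above translate directly into code. The sketch below follows the stated formulas; the convention of returning 0 when a denominator is zero is an assumption, as the text does not say how such cases are handled, and the function names are hypothetical.

```python
def f_measure(tp, fp, fn):
    """Return (recall, precision, F) from true-positive, false-positive, false-negative counts."""
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    if recall + precision == 0:
        return recall, precision, 0.0
    return recall, precision, 2 * recall * precision / (recall + precision)

def f_both(f_end, f_jun):
    """Harmonic mean of the termination and junction F-measures."""
    return 2 * f_end * f_jun / (f_end + f_jun) if f_end + f_jun else 0.0
```

Because the harmonic mean is dominated by the smaller of its two arguments, FBOTH is high only when both terminations and junctions are detected well.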

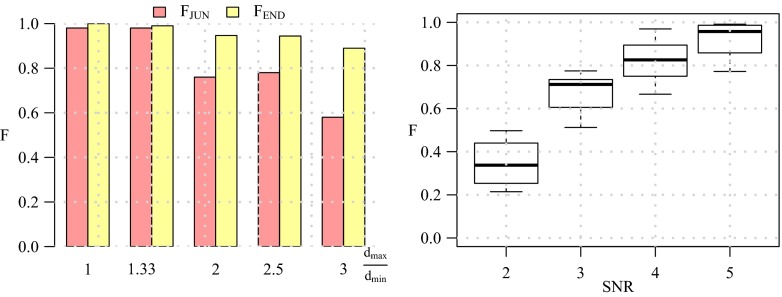

Evaluation on Simulated Triplet Images

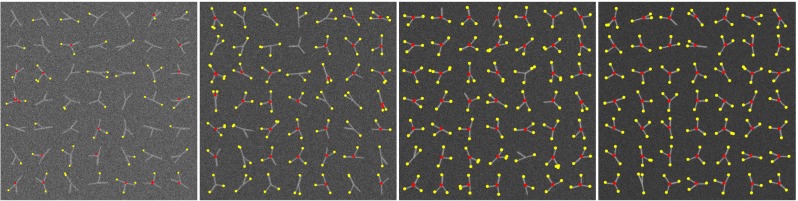

Before considering full neuron images we first evaluated the performance of our method in detecting terminations and junctions in a very basic configuration as a function of image quality. To this end we used a triplet model, consisting of a single junction modeling a bifurcation, having three branches with arbitrary orientations (angular intervals) and diameters (Fig. 12). Orientations were randomly sampled from a uniform distribution in the range [0,2π] while prohibiting branch overlap. Since in principle the directional filtering step (“Angular Profile Analysis”) uses a fixed kernel size D, we wanted to investigate the robustness of the detection for varying ratios dmax/dmin between the maximum and the minimum branch diameter in a triplet. For this experiment we considered ratios 1, 1.33, 2, 2.5, 3 by taking normalized diameter configurations (d1, d2, d3) = (0.33, 0.33, 0.33), (0.3, 0.3, 0.4), (0.2, 0.4, 0.4), (0.2, 0.3, 0.5), (0.2, 0.2, 0.6), where in each case the actual smallest diameter was set to 3 pixels (the resolution limit) and the other diameters were scaled accordingly. For each configuration we simulated images with 1,000 well-separated triplets for signal-to-noise ratio levels SNR=2, 3, 4, 5 (see cropped examples in Fig. 12). We chose these levels knowing that SNR=4 is a critical level in other detection problems (Smal et al. 2010; Chenouard et al. 2014). Poisson noise was used in simulating fluorescence microscopy imaging of the triplets. From the results of this experiment (Fig. 13) we conclude that our method is very robust for diameter ratios dmax/dmin≤2 and an image quality of SNR≥4. We also conclude that our method is somewhat better in detecting terminations than detecting junctions. Example detection results for dmax/dmin≤2 for the considered SNR levels are shown in Fig. 12.
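The relation between the normalized diameter configurations, the resulting ratios dmax/dmin, and the actual pixel diameters can be checked with a few lines of code. The configurations and the 3-pixel minimum are taken from the text; the function names are hypothetical.

```python
# Normalized branch diameter configurations (d1, d2, d3) of the triplets.
CONFIGS = [
    (0.33, 0.33, 0.33),
    (0.3, 0.3, 0.4),
    (0.2, 0.4, 0.4),
    (0.2, 0.3, 0.5),
    (0.2, 0.2, 0.6),
]
D_MIN_PIXELS = 3.0  # smallest branch diameter, set to the resolution limit

def diameter_ratio(config):
    """Ratio between the largest and the smallest branch diameter."""
    return max(config) / min(config)

def pixel_diameters(config, d_min=D_MIN_PIXELS):
    """Scale a configuration so that its smallest diameter equals d_min pixels."""
    scale = d_min / min(config)
    return tuple(d * scale for d in config)

for cfg in CONFIGS:
    ratio = round(diameter_ratio(cfg), 2)
    pixels = [round(d, 2) for d in pixel_diameters(cfg)]
    print(cfg, "ratio", ratio, "pixel diameters", pixels)
```

The largest configuration thus corresponds to branches of 3, 3, and 9 pixels, which gives a sense of how far the thickest branch departs from a kernel size D tuned to the thinnest one.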

Fig. 12.

Examples of simulated triplet images and detection results. Each triplet consists of three branches with different diameters which join at one end to form a bifurcation point and with the other ends being termination points. Images were generated at SNR=2,3,4,5 (left to right panel). The detection results with our method are indicated as red circles (bifurcation points) and yellow circles (termination points), where the radius of each circle reflects the size of the critical region found by our method

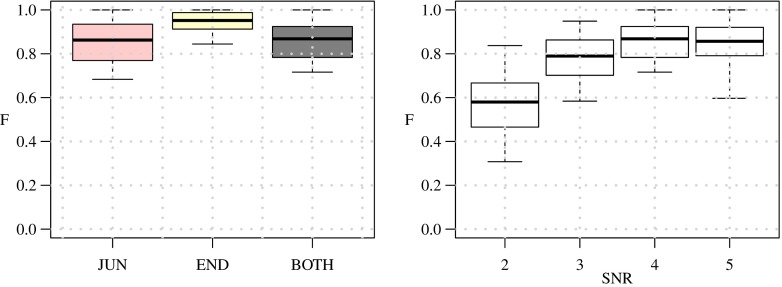

Fig. 13.

Performance of our method in detecting terminations and junctions in simulated images of triplets. The values of FEND and FJUN are shown (left panel) for the various branch diameter ratios at SNR=4. The distribution of FBOTH values is shown as a box plot (right panel) for the various SNR levels

Evaluation on Simulated Neuron Images

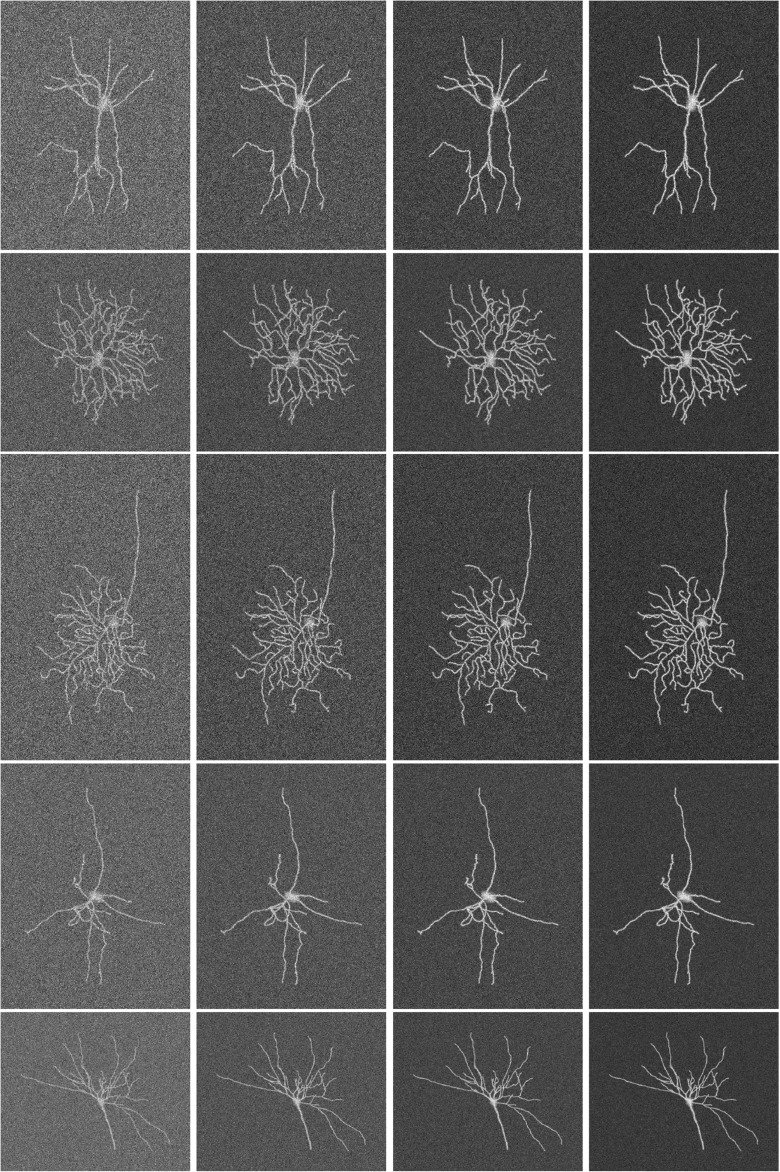

To evaluate our method on more complex images, but for which we would still know the truth exactly, we simulated the imaging of complete neurons. Although there are various ways this could be done (Koene et al. 2009; Vasilkoski and Stepanyants 2009), we chose to use existing expert reconstructions from the NeuroMorpho.Org database (Ascoli et al. 2007). A total of 30 reconstructions from different neuron types were downloaded as SWC files (Cannon et al. 1998), in which the reconstructions are represented as a sequence of connected center-point locations in 3D with corresponding radii in micrometers. Fluorescence microscopy images were generated from these reconstructions in 2D by using a Gaussian point-spread function model and Poisson noise to emulate diffraction-limited optics and photon statistics. For each reconstruction we generated images of SNR=2, 3, 4, 5 (Fig. 14). This way we obtained simulated images of neurons for which the termination and junction point locations were known exactly from the SWC files.
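How the reference critical points can be read from an SWC file is sketched below. The sketch assumes the standard seven-column SWC layout (node id, type, x, y, z, radius, parent id, with parent −1 for a root and `#` starting a comment). It treats nodes without children as terminations and nodes with two or more children as junctions. Excluding root nodes from the terminations, and counting a root with several children as a junction, are simplifying assumptions that may not match the authors' handling of the soma. The function names are hypothetical.

```python
def parse_swc(text):
    """Parse SWC text into a dict: node id -> type, position, radius, parent id."""
    nodes = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        node_id, node_type = int(fields[0]), int(fields[1])
        x, y, z, radius = (float(v) for v in fields[2:6])
        nodes[node_id] = {
            "type": node_type,
            "xyz": (x, y, z),
            "radius": radius,
            "parent": int(fields[6]),
        }
    return nodes

def critical_points(nodes):
    """Return (termination ids, junction ids) based on the number of child nodes."""
    n_children = {node_id: 0 for node_id in nodes}
    for node in nodes.values():
        if node["parent"] in n_children:
            n_children[node["parent"]] += 1
    terminations = [i for i, c in n_children.items()
                    if c == 0 and nodes[i]["parent"] != -1]
    junctions = [i for i, c in n_children.items() if c >= 2]
    return terminations, junctions
```

For a 2D evaluation the x and y coordinates of the returned nodes would serve as the reference locations, with the z coordinate discarded.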

Fig. 14.

Examples of simulated neuron images based on expert reconstructions from the NeuroMorpho.Org database. The images show a wide range of morphologies (one type per row) and image qualities of SNR=2, 3, 4, 5 (from left to right per row)

From the evaluation results (Fig. 15) we confirm the conclusion from the experiments on triplets that our method performs well for SNR≥4 and is somewhat better in detecting terminations than detecting junctions. For SNR=4 we find that the performance for junction detection is FJUN≈0.85 while for termination detection FEND≈0.95. The higher performance for termination detection may be explained by the fact that the underlying image structure is usually less ambiguous (a single line-like structure surrounded by darker background) than in the case of junctions (multiple line-like structures that are possibly very close to each other). Example detection results are shown in Fig. 16.

Fig. 15.

Performance of our method in detecting terminations and junctions in 30 simulated images of neurons. The distributions of the FEND, FJUN, and FBOTH values are shown as box plots for SNR=4 (left panel) and in addition the distribution of FBOTH is shown for SNR=2, 3, 4, 5 (right panel)

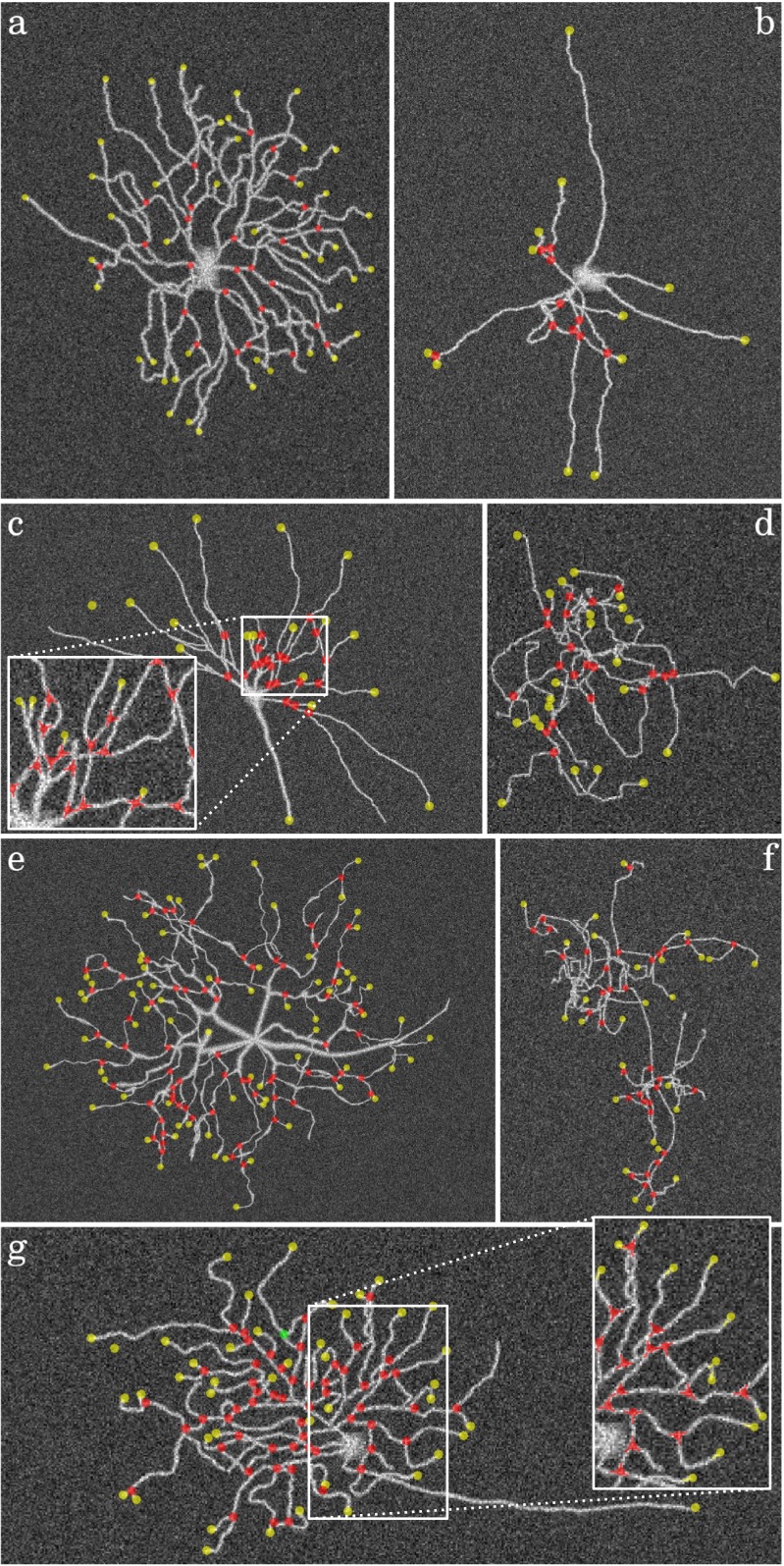

Fig. 16.

Example detection results in simulated neuron images at SNR=4. The images are contrast enhanced and show the detected terminations (yellow circles) and junctions (red circles) as overlays with fixed radius for better visibility. The value of FBOTH in these examples is (a) 0.69, (b) 0.85, (c) 0.85, (d) 0.77, (e) 0.75, (f) 0.68, (g) 0.86

Evaluation on Real Neuron Images

As the ultimate test case we also evaluated our method on real fluorescence microscopy images of neurons from a published study (Steiner et al. 2002). A total of 30 representative images were taken and expert manual annotations of the critical points were obtained to serve as the gold standard in this experiment. Needless to say, real images are generally more challenging than simulated images, as they contain more ambiguities due to labeling and imaging imperfections, and thus we expected our method to show lower performance. Since in this case we have no control over the SNR in the images we report the detection results of all images together. From the evaluation results (Fig. 17) we find that the median performance in detecting critical points is FJUN = 0.81 for junctions and FEND = 0.73 for terminations while FBOTH = 0.76. As expected, these numbers are lower than those of the simulated neuron images. Surprisingly, we observe that in the real images our method is better in detecting junctions than detecting terminations. A possible explanation for this could be that in the simulated images we used a constant intensity for the neuron branches, as a result of which terminations are as bright as junctions but much less ambiguous due to a clear background, while in the real images the terminations are usually much less clear due to labeling imperfections and the fact that the branch tips tend to be thinner and thus less bright than the junctions. This illustrates the limitations of the simulations. Example detection results are shown in Fig. 18.

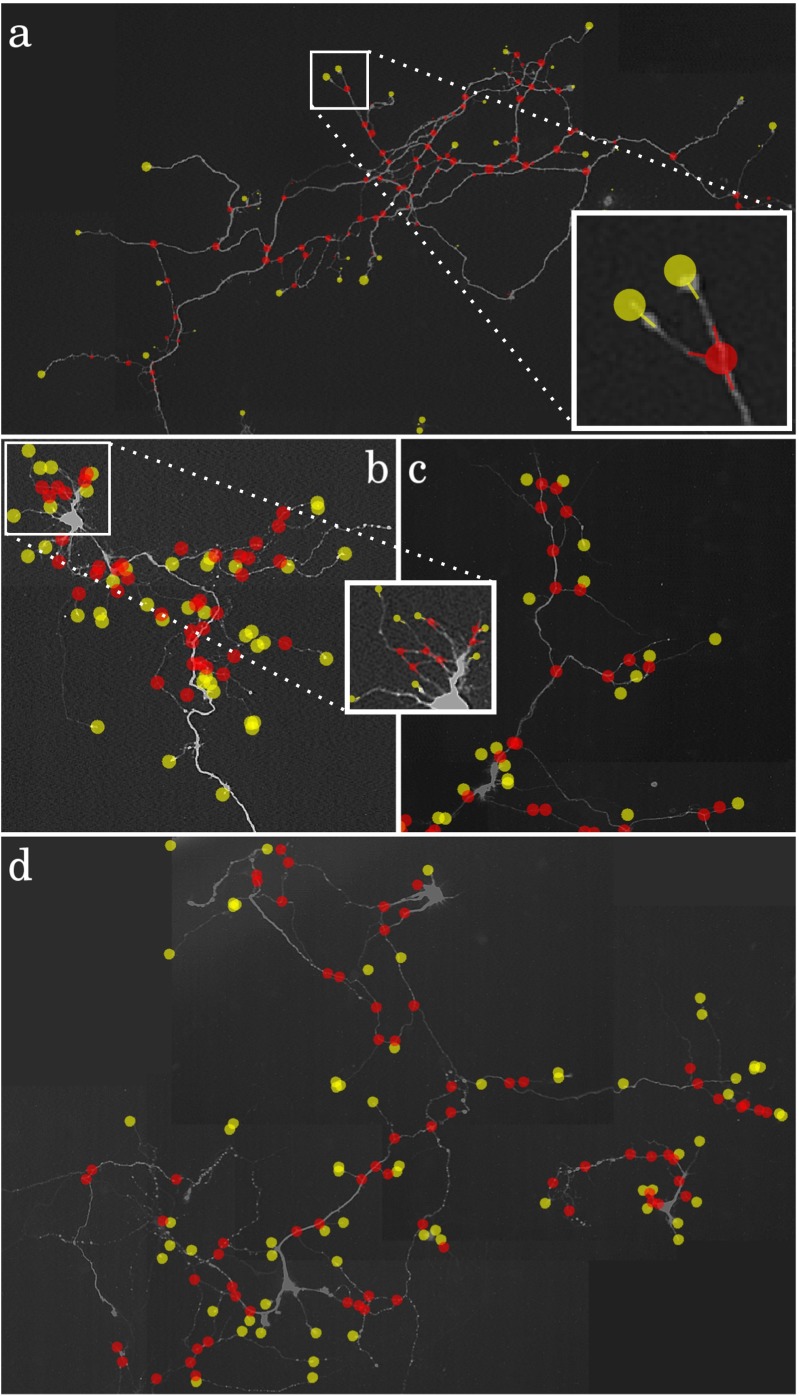

Fig. 17.

Performance of our method in detecting terminations and junctions in 30 real fluorescence microscopy images of neurons. The distributions of the FEND, FJUN, and FBOTH values for all images together are shown as box plots

Fig. 18.

Example detection results for four real neuron images. The images show the detected terminations (yellow circles) and junctions (red circles) as overlays with fixed radius for better visibility. The value of FBOTH in these examples is (a) 0.82, (b) 0.78, (c) 0.68, (d) 0.65

Comparison With Other Methods

Finally we sought to compare the performance of our Neuron Pinpointer (NP) method with other methods. Since we were not aware of other methods explicitly designed to detect and classify critical points in neuron images before reconstruction, we considered two existing software tools relevant in this context and we compared their implicit detection capabilities with our explicit method. If our method performs better, this would indicate that the existing methods may be improved by exploiting the output of our method.

The first tool, AnalyzeSkeleton (AS) (Arganda-Carreras et al. 2010), available from http://fiji.sc/AnalyzeSkeleton, is an ImageJ plugin for finding and counting all end-points and junctions in a skeleton image. To obtain skeleton images of our neuron images, we used the related skeletonization plugin available from the same developers, http://fiji.sc/Skeletonize3D, which is inspired by an advanced thinning algorithm (Lee et al. 1994). The input for the latter is a binary image obtained by segmentation based on smoothing (to reduce noise) and thresholding. For our experiments we considered a range of smoothing scales and manually selected thresholds as well as automatically determined thresholds using the following algorithms from ImageJ: Intermodes, Li, MaxEntropy, RenyiEntropy, Moments, Otsu, Triangle, and Yen. All of these were tried in combination with the AS method and the highest F-scores were used.

The second tool, All-Path-Pruning (APP2) (Xiao and Peng 2013), is a plugin for Vaa3D (Peng et al. 2010; Peng et al. 2014), available from http://www.vaa3d.org/. It was not designed specifically for a priori critical-point detection but for fully automatic neuron reconstruction. Nevertheless, in producing a tree representation of a neuron, the reconstruction algorithm must somehow identify the branch end-points and junctions, and for our experiments we can easily retrieve them from the SWC output files. In principle, any neuron reconstruction method is also implicitly a critical-point detection method, and we can quantify its performance by comparing the output tree nodes with the reference data. The interesting question is whether an explicit detector such as NP outperforms the implicit detection carried out in a tool such as APP2. We manually adjusted the user parameters of the tool to get optimal performance in our experiments.

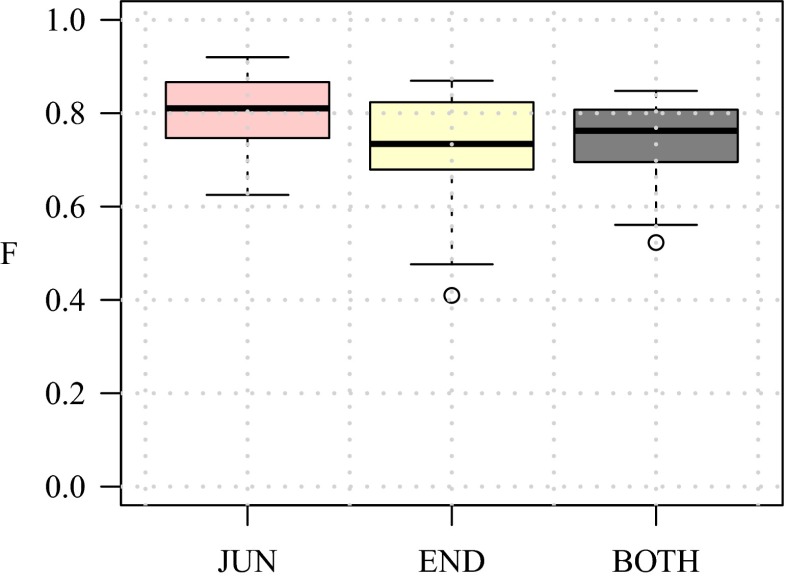

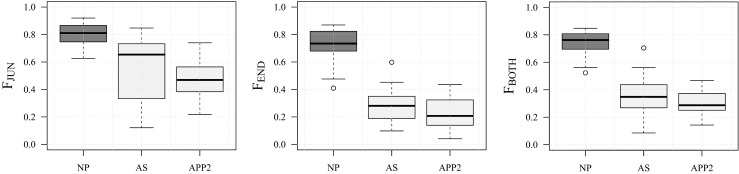

A comparison of the F-scores of NP, AS, and APP2 for the 30 real neuron images used in our experiments is presented in Fig. 19. From the plots we see that the detection rates of our NP method are substantially higher than those of AS and APP2. The difference is especially noticeable for the termination points. More specifically, the difference between FEND and FJUN is relatively small for NP, but much larger for both AS and APP2. This indicates a clear advantage of using our explicit and integrated approach for detecting critical points, as accurate neuron reconstruction requires accurate detection of both junctions and terminations. However, with the current implementation, this advantage does come at a cost: timing of the three methods on a standard PC (with Intel Core i7-2630QM 2 GHz CPU and 6 GB total RAM) revealed that with our images of 10^5 to 10^6 pixels in size, NP took about 40 seconds per image on average, while both AS and APP2 took only about 1.5 seconds per image. Fortunately, since virtually all the computation time of our method is spent in the directional filtering step, which is highly parallelizable, this cost can be reduced substantially by employing many-core hardware (such as GPUs).

Fig. 19.

Critical-point detection performance of our method (NP) compared to two other methods (AS and APP2). The median values of FJUN (left plot) are 0.81 (NP), 0.65 (AS), and 0.47 (APP2). The median values of FEND (middle plot) are 0.73 (NP), 0.28 (AS), and 0.21 (APP2). Finally, the median values of FBOTH (right plot) are 0.76 (NP), 0.35 (AS), and 0.29 (APP2)

Conclusions

We have presented a novel method for solving the important problem of detecting and characterizing critical points in the tree-like structures in neuron microscopy images. Based on a directional filtering and feature extraction algorithm in combination with a two-stage fuzzy-logic based reasoning system, it provides an integrated framework for the simultaneous identification of both terminations and junctions. From the results of experiments on simulated as well as real fluorescence microscopy images of neurons, we conclude that our method achieves substantially higher detection rates than the rates that can be inferred from existing neuron reconstruction methods. This is true for both junction points and termination points, but especially for the latter, which are of key importance in obtaining faithful reconstructions. Altogether, the results suggest that our method may provide important clues to improve the performance of reconstruction methods.

Actual integration of our detection method with existing tracing methods was outside the scope of the present study, but we are currently in the process of developing a new neuron tracing method and, in that context, we aim to perform an extensive evaluation of the beneficial effects of the presented method also on existing tracing methods. For this purpose we also aim to extend our method to 3D, where the exact same workflow could be used, except that the angular profile analysis and the final critical-point determination step would involve two angles (azimuth and elevation) instead of one. Also, it would require mass parallelization of the image filtering step to keep the running times of the method acceptable, but this should be straightforward in view of the highly parallel nature of this step.

Although we focused on neuron analysis in this work, our method may also be potentially useful for other applications involving tree-like image structures, such as blood vessel or bronchial tree analysis, but this requires further exploration. For this purpose it may be helpful to increase the robustness of the detection method to larger branch diameter ratios than tested in this paper. This could be done, for example, by using multiscale filtering approaches, or by selective morphological thinning (or thickening).

Information Sharing Statement

The software implementation of the presented method is available as an ImageJ (RRID:nif-0000-30467) plugin from https://bitbucket.org/miroslavradojevic/npinpoint.

Acknowledgments

This work was funded by the Netherlands Organization for Scientific Research (NWO grant 612.001.018 awarded to Erik Meijering).

Contributor Information

Miroslav Radojević, Email: m.radojevic@erasmusmc.nl.

Erik Meijering, Email: h.meijering@erasmusmc.nl.

References

- Abràmoff MD, Magalhães PJ, Ram SJ. Image processing with ImageJ. Biophotonics International. 2004;11(7):36–43.

- Agam G, Armato SG III, Wu C. Vessel tree reconstruction in thoracic CT scans with application to nodule detection. IEEE Transactions on Medical Imaging. 2005;24(4):486–499. doi: 10.1109/TMI.2005.844167.

- Aibinu AM, Iqbal MI, Shafie AA, Salami MJE, Nilsson M. Vascular intersection detection in retina fundus images using a new hybrid approach. Computers in Biology and Medicine. 2010;40(1):81–89. doi: 10.1016/j.compbiomed.2009.11.004.

- Al-Kofahi Y, Dowell-Mesfin N, Pace C, Shain W, Turner JN, Roysam B. Improved detection of branching points in algorithms for automated neuron tracing from 3D confocal images. Cytometry Part A. 2008;73(1):36–43. doi: 10.1002/cyto.a.20499.

- Anderton BH, Callahan L, Coleman P, Davies P, Flood D, Jicha GA, Ohm T, Weaver C. Dendritic changes in Alzheimer’s disease and factors that may underlie these changes. Progress in Neurobiology. 1998;55(6):595–609. doi: 10.1016/S0301-0082(98)00022-7.

- Arganda-Carreras I, Fernández-González R, Muñoz-Barrutia A, Ortiz-De-Solorzano C. 3D reconstruction of histological sections: application to mammary gland tissue. Microscopy Research and Technique. 2010;73(11):1019–1029. doi: 10.1002/jemt.20829.

- Ascoli GA. Computational neuroanatomy: Principles and methods. Totowa: Humana Press; 2002.

- Ascoli GA, Donohue DE, Halavi M. NeuroMorpho.Org: a central resource for neuronal morphologies. Journal of Neuroscience. 2007;27(35):9247–9251. doi: 10.1523/JNEUROSCI.2055-07.2007.

- Azzopardi G, Petkov N. Automatic detection of vascular bifurcations in segmented retinal images using trainable COSFIRE filters. Pattern Recognition Letters. 2013;34(8):922–933. doi: 10.1016/j.patrec.2012.11.002.

- Bas E, Erdogmus D. Principal curves as skeletons of tubular objects: locally characterizing the structures of axons. Neuroinformatics. 2011;9(2-3):181–191. doi: 10.1007/s12021-011-9105-2.

- Basu S, Condron B, Aksel A, Acton S. Segmentation and tracing of single neurons from 3D confocal microscope images. IEEE Journal of Biomedical and Health Informatics. 2013;17(2):319–335. doi: 10.1109/TITB.2012.2209670.

- Bevilacqua V, Cambò S, Cariello L, Mastronardi G. A combined method to detect retinal fundus features. In: Proceedings of the IEEE European conference on emergent aspects in clinical data analysis. 2005. pp. 1–6.

- Bevilacqua V, Cariello L, Giannini M, Mastronardi G, Santarcangelo V, Scaramuzzi R, Troccoli A. A comparison between a geometrical and an ANN based method for retinal bifurcation points extraction. Journal of Universal Computer Science. 2009;15(13):2608–2621.

- Bhuiyan A, Nath B, Chua J, Ramamohanarao K. Automatic detection of vascular bifurcations and crossovers from color retinal fundus images. In: Proceedings of the international IEEE conference on signal-image technologies and internet-based system. 2007. pp. 711–718.

- Calvo D, Ortega M, Penedo MG, Rouco J. Automatic detection and characterisation of retinal vessel tree bifurcations and crossovers in eye fundus images. Computer Methods and Programs in Biomedicine. 2011;103(1):28–38. doi: 10.1016/j.cmpb.2010.06.002.

- Can A, Shen H, Turner JN, Tanenbaum HL, Roysam B. Rapid automated tracing and feature extraction from retinal fundus images using direct exploratory algorithms. IEEE Transactions on Information Technology in Biomedicine. 1999;3(2):125–138. doi: 10.1109/4233.767088.

- Cannon R, Turner D, Pyapali G, Wheal H. An on-line archive of reconstructed hippocampal neurons. Journal of Neuroscience Methods. 1998;84(1):49–54. doi: 10.1016/S0165-0270(98)00091-0.

- Cheng Y. Mean shift, mode seeking, and clustering. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1995;17(8):790–799. doi: 10.1109/34.400568.

- Chenouard N, Smal I, de Chaumont F, Maška M, Sbalzarini IF, Gong Y, Cardinale J, Carthel C, Coraluppi S, Winter M, Cohen AR, Godinez WJ, Rohr K, Kalaidzidis Y, Liang L, Duncan J, Shen H, Xu Y, Magnusson KEG, Jaldén J, Blau HM, Paul-Gilloteaux P, Roudot P, Kervrann C, Waharte F, Tinevez JY, Shorte SL, Willemse J, Celler K, van Wezel GP, Dan HW, Tsai YS, Ortiz-de-Solórzano C, Olivo-Marin JC, Meijering E. Objective comparison of particle tracking methods. Nature Methods. 2014;11(3):281–289. doi: 10.1038/nmeth.2808.

- Choromanska A, Chang SF, Yuste R. Automatic reconstruction of neural morphologies with multi-scale tracking. Frontiers in Neural Circuits. 2012;6(25):1–13. doi: 10.3389/fncir.2012.00025.

- Chothani P, Mehta V, Stepanyants A. Automated tracing of neurites from light microscopy stacks of images. Neuroinformatics. 2011;9(2-3):263–278. doi: 10.1007/s12021-011-9121-2.

- Dehmelt L, Poplawski G, Hwang E, Halpain S. NeuriteQuant: an open source toolkit for high content screens of neuronal morphogenesis. BMC Neuroscience. 2011;12:100.

- Dercksen VJ, Hege HC, Oberlaender M. The Filament Editor: an interactive software environment for visualization, proof-editing and analysis of 3D neuron morphology. Neuroinformatics. 2014;12(2):325–339. doi: 10.1007/s12021-013-9213-2.

- Deschênes F, Ziou D. Detection of line junctions and line terminations using curvilinear features. Pattern Recognition Letters. 2000;21(6):637–649. doi: 10.1016/S0167-8655(00)00032-5.

- Dima A, Scholz M, Obermayer K. Automatic segmentation and skeletonization of neurons from confocal microscopy images based on the 3-D wavelet transform. IEEE Transactions on Image Processing. 2002;11(7):790–801. doi: 10.1109/TIP.2002.800888.

- Donohue DE, Ascoli GA. A comparative computer simulation of dendritic morphology. PLoS Computational Biology. 2008;4(6):1–15. doi: 10.1371/journal.pcbi.1000089.

- Donohue DE, Ascoli GA. Automated reconstruction of neuronal morphology: An overview. Brain Research Reviews. 2011;67(1):94–102. doi: 10.1016/j.brainresrev.2010.11.003.

- Doyle W. Operations useful for similarity-invariant pattern recognition. Journal of the ACM. 1962;9(2):259–267. doi: 10.1145/321119.321123.

- Flannery BP, Press WH, Teukolsky SA, Vetterling W. Numerical Recipes in C. New York: Cambridge University Press; 1992.

- Frangi AF, Niessen WJ, Vincken KL, Viergever MA. Multiscale vessel enhancement filtering. In: Proceedings of the international conference on medical image computing and computer-assisted intervention. 1998. pp. 130–137.

- Halavi M, Hamilton KA, Parekh R, Ascoli GA. Digital reconstructions of neuronal morphology: three decades of research trends. Frontiers in Neuroscience. 2012;6(49):1–11. doi: 10.3389/fnins.2012.00049.

- Hansen T, Neumann H. Neural mechanisms for the robust representation of junctions. Neural Computation. 2004;16(5):1013–1037. doi: 10.1162/089976604773135087.

- He W, Hamilton TA, Cohen AR, Holmes TJ, Pace C, Szarowski DH, Turner JN, Roysam B. Automated three-dimensional tracing of neurons in confocal and brightfield images. Microscopy and Microanalysis. 2003;9(4):296–310. doi: 10.1017/S143192760303040X.

- Ho SY, Chao CY, Huang HL, Chiu TW, Charoenkwan P, Hwang E. NeurphologyJ: an automatic neuronal morphology quantification method and its application in pharmacological discovery. BMC Bioinformatics. 2011;12(1):230. doi: 10.1186/1471-2105-12-230.

- Hoover A, Kouznetsova V, Goldbaum M. Locating blood vessels in retinal images by piecewise threshold probing of a matched filter response. IEEE Transactions on Medical Imaging. 2000;19(3):203–210. doi: 10.1109/42.845178.

- Iber D, Menshykau D. The control of branching morphogenesis. Open Biology. 2013;3(9):1–16. doi: 10.1098/rsob.130088.

- Kandel ER, Schwartz JH, Jessell TM. Principles of neural science. New York: McGraw-Hill; 2000.

- Kapur J, Sahoo PK, Wong AK. A new method for gray-level picture thresholding using the entropy of the histogram. Computer Vision, Graphics, and Image Processing. 1985;29(3):273–285. doi: 10.1016/0734-189X(85)90125-2.

- Koene RA, Tijms B, van Hees P, Postma F, de Ridder A, Ramakers GJA, van Pelt J, van Ooyen A. NETMORPH: a framework for the stochastic generation of large scale neuronal networks with realistic neuron morphologies. Neuroinformatics. 2009;7(3):195–210. doi: 10.1007/s12021-009-9052-3.

- Laganiere R, Elias R. The detection of junction features in images. In: Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing; 2004. p. 573–576.

- Leandro JJG, Cesar-Jr RM, da F Costa L. Automatic contour extraction from 2D neuron images. Journal of Neuroscience Methods. 2009;177(2):497–509. doi: 10.1016/j.jneumeth.2008.10.037.

- Lee TC, Kashyap RL, Chu CN. Building skeleton models via 3-D medial surface axis thinning algorithms. CVGIP: Graphical Models and Image Processing. 1994;56(6):462–478.

- Lewis J. Fast normalized cross-correlation. Vision Interface. 1995;10(1):120–123.

- Lin YC, Koleske AJ. Mechanisms of synapse and dendrite maintenance and their disruption in psychiatric and neurodegenerative disorders. Annual Review of Neuroscience. 2010;33:349.

- Liu Y. The DIADEM and beyond. Neuroinformatics. 2011;9(2–3):99–102. doi: 10.1007/s12021-011-9102-5.

- Longair MH, Baker DA, Armstrong JD. Simple Neurite Tracer: open source software for reconstruction, visualization and analysis of neuronal processes. Bioinformatics. 2011;27(17):2453–2454. doi: 10.1093/bioinformatics/btr390.

- Lu J, Fiala JC, Lichtman JW. Semi-automated reconstruction of neural processes from large numbers of fluorescence images. PLoS One. 2009;4(5):e5655. doi: 10.1371/journal.pone.0005655.

- Luisi J, Narayanaswamy A, Galbreath Z, Roysam B. The FARSIGHT trace editor: an open source tool for 3-D inspection and efficient pattern analysis aided editing of automated neuronal reconstructions. Neuroinformatics. 2011;9(2–3):305–315. doi: 10.1007/s12021-011-9115-0.

- Meijering E. Neuron tracing in perspective. Cytometry Part A. 2010;77(7):693–704. doi: 10.1002/cyto.a.20895.

- Meijering E, Jacob M, Sarria JCF, Steiner P, Hirling H, Unser M. Design and validation of a tool for neurite tracing and analysis in fluorescence microscopy images. Cytometry Part A. 2004;58(2):167–176. doi: 10.1002/cyto.a.20022.

- Mendel JM. Fuzzy logic systems for engineering: a tutorial. Proceedings of the IEEE. 1995;83(3):345–377. doi: 10.1109/5.364485.

- Michaelis M, Sommer G. Junction classification by multiple orientation detection. In: Proceedings of the European conference on computer vision; 1994. p. 101–108.

- Myatt DR, Hadlington T, Ascoli GA, Nasuto SJ. Neuromantic – from semi-manual to semi-automatic reconstruction of neuron morphology. Frontiers in Neuroinformatics. 2012;6(4):1–14. doi: 10.3389/fninf.2012.00004.

- Narayanaswamy A, Wang Y, Roysam B. 3-D image pre-processing algorithms for improved automated tracing of neuronal arbors. Neuroinformatics. 2011;9(2–3):219–231. doi: 10.1007/s12021-011-9116-z.

- Narro ML, Yang F, Kraft R, Wenk C, Efrat A, Restifo LL. NeuronMetrics: software for semi-automated processing of cultured neuron images. Brain Research. 2007;1138:57–75. doi: 10.1016/j.brainres.2006.10.094.

- Obara B, Fricker M, Gavaghan D, Grau V. Contrast-independent curvilinear structure detection in biomedical images. IEEE Transactions on Image Processing. 2012;21(5):2572–2581. doi: 10.1109/TIP.2012.2185938.

- Obara B, Fricker M, Grau V. Contrast independent detection of branching points in network-like structures. In: Proceedings of SPIE medical imaging: image processing; 2012b. p. 83141L.

- Peng H, Ruan Z, Long F, Simpson JH, Myers EW. V3D enables real-time 3D visualization and quantitative analysis of large-scale biological image data sets. Nature Biotechnology. 2010;28(4):348–353. doi: 10.1038/nbt.1612.

- Peng H, Long F, Zhao T, Myers E. Proof-editing is the bottleneck of 3D neuron reconstruction: the problem and solutions. Neuroinformatics. 2011;9(2–3):103–105. doi: 10.1007/s12021-010-9090-x.

- Peng H, Bria A, Zhou Z, Iannello G, Long F. Extensible visualization and analysis for multidimensional images using Vaa3D. Nature Protocols. 2014;9(1):193–208. doi: 10.1038/nprot.2014.011.

- Pool M, Thiemann J, Bar-Or A, Fournier AE. NeuriteTracer: a novel ImageJ plugin for automated quantification of neurite outgrowth. Journal of Neuroscience Methods. 2008;168(1):134–139. doi: 10.1016/j.jneumeth.2007.08.029.

- Powers DMW. Evaluation: from precision, recall, and F-measure to ROC, informedness, markedness and correlation. Journal of Machine Learning Technologies. 2011;2(1):37–63.

- Püspöki Z, Vonesch C, Unser M. Detection of symmetric junctions in biological images using 2-D steerable wavelet transforms. In: Proceedings of the IEEE international symposium on biomedical imaging; 2013. p. 1496–1499.

- Radojević M, Smal I, Niessen W, Meijering E. Fuzzy logic based detection of neuron bifurcations in microscopy images. In: Proceedings of the IEEE international symposium on biomedical imaging; 2014. p. 1307–1310.

- Santamaría-Pang A, Hernandez-Herrera P, Papadakis M, Saggau P, Kakadiaris IA. Automatic morphological reconstruction of neurons from multiphoton and confocal microscopy images using 3D tubular models. Neuroinformatics. 2015;13(3):297–320. doi: 10.1007/s12021-014-9253-2.

- Schmitt S, Evers JF, Duch C, Scholz M, Obermayer K. New methods for the computer-assisted 3-D reconstruction of neurons from confocal image stacks. NeuroImage. 2004;23(4):1283–1298. doi: 10.1016/j.neuroimage.2004.06.047.

- Schneider CA, Rasband WS, Eliceiri KW. NIH Image to ImageJ: 25 years of image analysis. Nature Methods. 2012;9(7):671–675. doi: 10.1038/nmeth.2089.

- Senft SL. A brief history of neuronal reconstruction. Neuroinformatics. 2011;9(2–3):119–128. doi: 10.1007/s12021-011-9107-0.

- Sinzinger ED. A model-based approach to junction detection using radial energy. Pattern Recognition. 2008;41(2):494–505. doi: 10.1016/j.patcog.2007.06.032.

- Ṡiṡková Z, Justus D, Kaneko H, Friedrichs D, Henneberg N, Beutel T, Pitsch J, Schoch S, Becker A, von der Kammer H, Remy S. Dendritic structural degeneration is functionally linked to cellular hyperexcitability in a mouse model of Alzheimer’s disease. Neuron. 2014;84(5):1023–1033. doi: 10.1016/j.neuron.2014.10.024.

- Smal I, Loog M, Niessen W, Meijering E. Quantitative comparison of spot detection methods in fluorescence microscopy. IEEE Transactions on Medical Imaging. 2010;29(2):282–301. doi: 10.1109/TMI.2009.2025127.

- Sonka M, Hlavac V, Boyle R. Image processing, analysis, and machine vision. Florence: Cengage Learning; 2007.

- Steiner P, Sarria JCF, Huni B, Marsault R, Catsicas S, Hirling H. Overexpression of neuronal Sec1 enhances axonal branching in hippocampal neurons. Neuroscience. 2002;113(4):893–905. doi: 10.1016/S0306-4522(02)00225-7.

- Su R, Sun C, Pham TD. Junction detection for linear structures based on Hessian, correlation and shape information. Pattern Recognition. 2012;45(10):3695–3706. doi: 10.1016/j.patcog.2012.04.013.

- Svoboda K. The past, present, and future of single neuron reconstruction. Neuroinformatics. 2011;9(2):97–98. doi: 10.1007/s12021-011-9097-y.

- Tsai CL, Stewart CV, Tanenbaum HL, Roysam B. Model-based method for improving the accuracy and repeatability of estimating vascular bifurcations and crossovers from retinal fundus images. IEEE Transactions on Information Technology in Biomedicine. 2004;8(2):122–130. doi: 10.1109/TITB.2004.826733.

- Türetken E, González G, Blum C, Fua P. Automated reconstruction of dendritic and axonal trees by global optimization with geometric priors. Neuroinformatics. 2011;9(2–3):279–302. doi: 10.1007/s12021-011-9122-1.

- Vasilkoski Z, Stepanyants A. Detection of the optimal neuron traces in confocal microscopy images. Journal of Neuroscience Methods. 2009;178(1):197–204. doi: 10.1016/j.jneumeth.2008.11.008.

- Weaver CM, Hof PR, Wearne SL, Lindquist WB. Automated algorithms for multiscale morphometry of neuronal dendrites. Neural Computation. 2004;16(7):1353–1383. doi: 10.1162/089976604323057425.

- Xiao H, Peng H. APP2: automatic tracing of 3D neuron morphology based on hierarchical pruning of a gray-weighted image distance-tree. Bioinformatics. 2013;29(11):1448–1454. doi: 10.1093/bioinformatics/btt170.

- Xiong G, Zhou X, Degterev A, Ji L, Wong STC. Automated neurite labeling and analysis in fluorescence microscopy images. Cytometry Part A. 2006;69(6):494–505. doi: 10.1002/cyto.a.20296.

- Yu W, Daniilidis K, Sommer G. Rotated wedge averaging method for junction characterization. In: Proceedings of the IEEE computer society conference on computer vision and pattern recognition; 1998. p. 390–395.

- Yuan X, Trachtenberg JT, Potter SM, Roysam B. MDL constrained 3-D grayscale skeletonization algorithm for automated extraction of dendrites and spines from fluorescence confocal images. Neuroinformatics. 2009;7(4):213–232. doi: 10.1007/s12021-009-9057-y.

- Zadeh LA. Fuzzy logic and approximate reasoning. Synthese. 1975;30(3–4):407–428. doi: 10.1007/BF00485052.

- Zhang Y, Zhou X, Degterev A, Lipinski M, Adjeroh D, Yuan J, Wong STC. Automated neurite extraction using dynamic programming for high-throughput screening of neuron-based assays. NeuroImage. 2007;35(4):1502–1515. doi: 10.1016/j.neuroimage.2007.01.014.

- Zhou J, Chang S, Metaxas D, Axel L. Vascular structure segmentation and bifurcation detection. In: Proceedings of the IEEE international symposium on biomedical imaging; 2007. p. 872–875.

- Zhou Z, Liu X, Long B, Peng H. TReMAP: automatic 3D neuron reconstruction based on tracing, reverse mapping and assembling of 2D projections. Neuroinformatics. 2015; in press.